General

Sentinel Hub forum is the best place to get in touch with support staff as well as with other Sentinel Hub users.

You can find a quick tutorial on Sentinel Hub here.

We also recommend a short course on the fundamentals of remote sensing with a comprehensive overview of common use cases and tools. If you want to dive a little deeper into Sentinel Hub topics, you can find our webinars here.

You can find the Sentinel Hub forum here. Select your category of interest and you might find your question already answered. If not, ask and our team or other users will help you find a solution.

To learn more about the licensing, please check our Terms of Use - Sentinel Hub Collection Specific Additional Terms.

You can cite our applications / web pages in the following way (link is optional):

- EO Browser, https://apps.sentinel-hub.com/eo-browser/, Sinergise Solutions d.o.o., a Planet Labs company

- Sentinel Hub, https://www.sentinel-hub.com, Sinergise Solutions d.o.o., a Planet Labs company

- “Blog title”, Url, Sentinel Hub, Sinergise Solutions d.o.o., a Planet Labs company

When referring to Sentinel data acquired through the Sentinel Hub services, please use the following credit:

- “Modified Copernicus Sentinel data [Year]/Sentinel Hub”

See also more about the collection-specific terms and conditions.

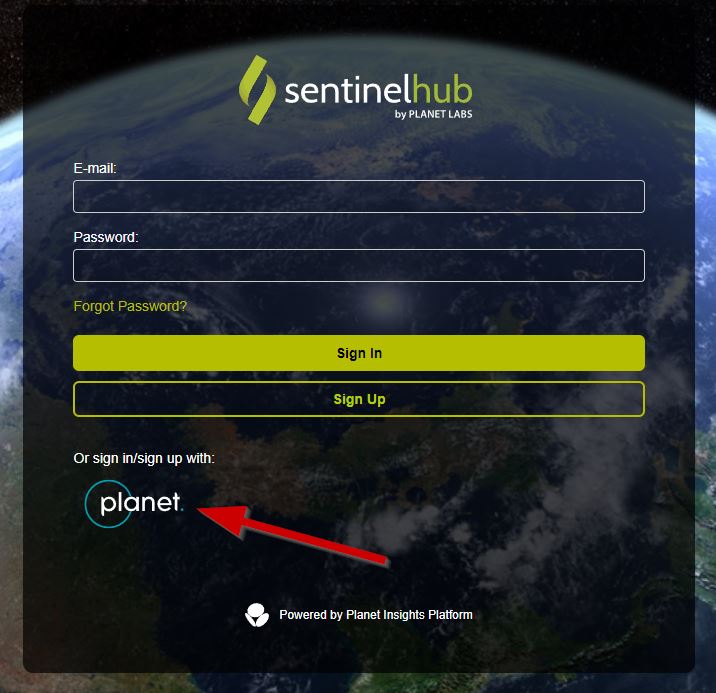

Before logging in, ensure that your Planet account is linked with your Sentinel Hub account. If you need help linking your Sentinel Hub account with your Planet account, please visit Planet’s support page here.

To log in to Sentinel Hub with your Planet credentials, follow these steps:

- If your accounts are already linked:
  - Go to the Sentinel Hub sign-in page.
  - Select the “Sign in with Planet” option.
  - Enter your Planet credentials when prompted.
  - Success! You are now logged in to Sentinel Hub.

- If you have a Sentinel Hub account which was created for you:
  - You need to set up your Sentinel Hub credentials first.
  - Click on the “Forgot Password?” link on the sign-in page.
  - Follow the instructions to reset your password.
  - Once you have set up your Sentinel Hub credentials, you can proceed to link your accounts or log in as described above.

- If your accounts are not yet linked and you want to log in using your Planet credentials:
  - Click on “Sign in with Planet” on the Sentinel Hub sign-in page.
  - Provide your Planet credentials.
  - The system will recognize that your Planet account is not linked with a Sentinel Hub account and will prompt you to provide your Sentinel Hub credentials to link the accounts.
  - If you do not have Sentinel Hub credentials, click on the “Forgot Password?” link on the sign-in page to set them up.

In case you encounter any issues during this process, please do not hesitate to contact our support team at support@planet.com.

If you need help linking your Sentinel Hub account with your Planet account, please visit Planet’s support page here.

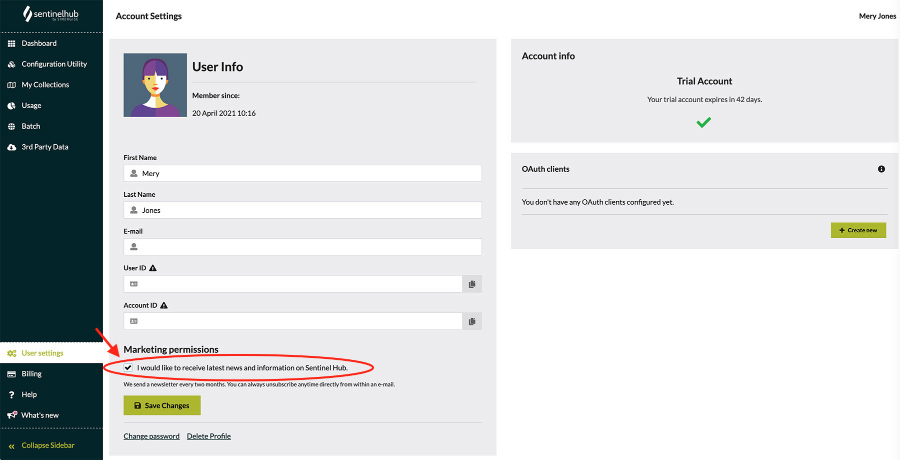

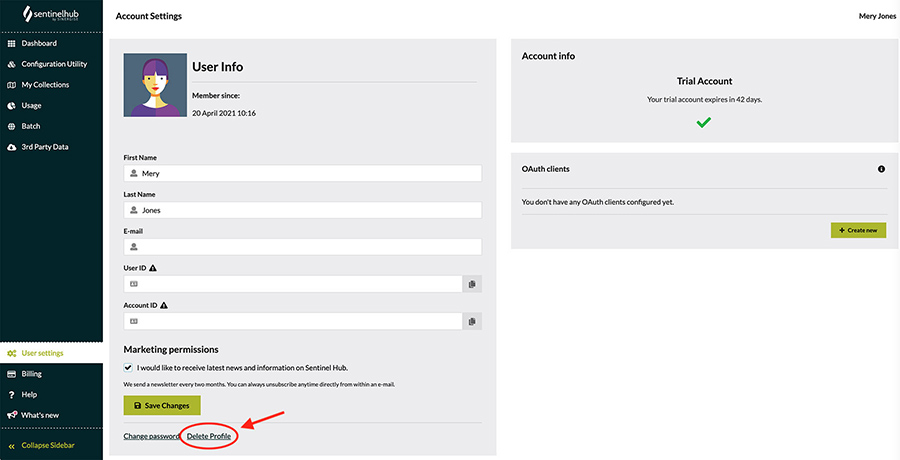

To delete your profile and remove your personal information from our web page, go to the Sentinel Hub Dashboard. Under the “User settings” tab, click the “Delete profile” button and confirm your decision by clicking the “Yes, delete profile” button in the pop-up window.

OAuth clients have expiration dates to enhance security and reduce risks. They ensure regular credential rotation, prevent unauthorized access, and follow security best practices. This helps protect your applications from potential security breaches.

Yes, you can create OAuth clients that don’t expire. When registering a new OAuth client in the Dashboard, you can select the “Never expire” option to create a client without an expiration date. For more details on the security implications of choosing this option, please refer to the FAQ: Why do OAuth clients have an expiration date?

Subscription, Packages and Pricing

Our subscription plans are described here: https://www.sentinel-hub.com/pricing/

The difference between the consumer/research and commercial plans lies in the purpose for which you use our services. If you use Sentinel Hub services for research or for personal purposes, the consumer/research option is the right one. If you use them within your company, you should choose the commercial option.

| Mission | Product | Resolution [m] | km² per PU | € per km² |
|---|---|---|---|---|
| Sentinel-2 | True color | 10 | 26.2 | 0.00006 |
| | NDVI | 10 | 39.3 | 0.00004 |
| | All 13 bands | 10 | 6.0 | 0.00025 |
| Sentinel-1 | VV gamma0 ortho | 20 | 157.3 | 0.00001 |
| Sentinel-3 OLCI | True color | 300 | 23 593.0 | 0.00000007 |
| | OTCI | 300 | 35 389.4 | 0.00000004 |
| Landsat-8 | True color - pansharpened | 15 | 44.2 | 0.00003 |
| | NDVI | 30 | 59.0 | 0.00003 |
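The km² column can be cross-checked against the processing unit factors used in the examples below. In those examples, a 512 x 512 px output with 3 input bands counts as 1 PU, and orthorectification doubles the cost. The JavaScript sketch below applies only those factors. `km2PerPU` is a hypothetical helper, not part of any Sentinel Hub API, and the Landsat rows are not reproduced with it.

```javascript
// Sketch: km² covered by one processing unit, using only the factors
// from the PU examples on this page.
const PIXELS_PER_PU = 512 * 512; // output pixels counted as 1 PU
const BANDS_PER_PU = 3;          // input bands counted as 1 PU

function km2PerPU(resolutionMeters, bands, orthorectified = false) {
  // Ground area of one pixel in km².
  const pixelAreaKm2 = (resolutionMeters * resolutionMeters) / 1e6;
  // PU charged for one 512 x 512 px output with these settings.
  const puPerTile = (bands / BANDS_PER_PU) * (orthorectified ? 2 : 1);
  // Area of that output divided by its PU cost.
  return (PIXELS_PER_PU * pixelAreaKm2) / puPerTile;
}

console.log(km2PerPU(10, 3).toFixed(1));       // Sentinel-2 true color: 26.2
console.log(km2PerPU(10, 2).toFixed(1));       // Sentinel-2 NDVI: 39.3
console.log(km2PerPU(10, 13).toFixed(1));      // Sentinel-2 all 13 bands: 6.0
console.log(km2PerPU(20, 1, true).toFixed(1)); // Sentinel-1 VV gamma0 ortho: 157.3
console.log(km2PerPU(300, 3).toFixed(1));      // Sentinel-3 OLCI true color: 23593.0
```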

If you require support with Sentinel Hub access as a Planet customer, please visit Planet’s support page here.

Each request is worth a certain amount of processing units. If we only focus on rate limiting, the subscription package you need depends on how many processing units (PU) and requests per minute or month you intend to use. This in turn depends on how large your images are in pixels, how many of them you order, how many bands you order, the format used and whether some more complex processing options are enabled.

See the documentation on how processing units are defined and calculated here. The following are detailed calculation examples for Sentinel-1, exploring three scenarios: a single image request (and many such small requests), a long time range, and large-area processing.

Example 1

One request that outputs a 2000 x 2000 px image with 2 bands ordered, in 16-bit TIFF format and with orthorectification applied, would use 20.34 PU. See the multiplication factors and the calculation below:

| Parameter | Your parameters | Multiplication factor |
|---|---|---|
| Output image size (width x height) | 2000 x 2000 = 4 000 000 | / (512 * 512) |
| Number of input bands | 2 | / 3 |
| Output format | 16 bit INT/UINT | x1 |
| Number of data samples per pixel | 1 | x1 |
| Orthorectification | Yes | x2 |

The calculation: (4 000 000 / 262 144) x (2 / 3) x 1 x 1 x 2 = 20.34 PU per request.

If you wanted to get 500 images (e.g. for a time series), you would need to create 500 separate processing requests. If the parameters for each image are the same as above, we can simply multiply our calculation by 500 and see that 500 similar requests would use about 10 172 PU in total.

The calculation: 20.345 PU x 500 = ~10 172 PU for 500 requests

If you look at pricing, you will see that the Exploration package is enough for 10 172 PUs, as it gives you 30 000 PU per month.

If your output image were smaller, e.g. 500 x 500 px, a single request would cost 1.27 PU. Multiplied by 500, that’s just 635.78 PU in total, which is much less. On the other hand, if you wanted a large output of 5000 x 5000 px, a request would cost you 127.15 PU, and 500 of these would cost ~63 578 PU. You might think this would be covered by the Basic account, as it offers up to 70 000 PU per month. However, the Processing API limits output width and height to 2500 px, so setting them to 5000 would result in an error. To output images this large, you would need an Enterprise account to use the Batch Processing API. Batch processing costs only a third of the Processing API price, so your PU cost is divided by 3: your 500 requests would cost 63 578 / 3 = 21 192 PU.
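The arithmetic of Example 1 can be written as a small JavaScript function. This is a sketch that applies only the multiplication factors listed in the tables on this page, plus the 1/3 batch factor. Those factors cover output size, input bands, output format, samples per pixel and orthorectification. `processingUnits` and its parameter names are hypothetical, and the complete rules are in the processing unit documentation.

```javascript
// Sketch of the PU arithmetic from Examples 1-3. Only the factors listed in
// the tables are applied, plus the 1/3 factor for batch processing.
function processingUnits({
  width,             // output width in pixels
  height,            // output height in pixels
  bands,             // number of input bands
  formatFactor = 1,  // 16-bit INT/UINT output counts as x1 in the tables
  samples = 1,       // number of data samples per pixel
  orthorectify = false,
  batch = false,     // batch processing costs a third of the Processing API
}) {
  let pu = (width * height) / (512 * 512); // output size factor
  pu *= bands / 3;                         // input bands factor
  pu *= formatFactor;
  pu *= samples;
  if (orthorectify) pu *= 2;
  if (batch) pu /= 3;
  return pu;
}

// Example 1: one 2000 x 2000 px request, then 500 of them.
const single = processingUnits({ width: 2000, height: 2000, bands: 2, orthorectify: true });
console.log(single.toFixed(3));          // 20.345
console.log((single * 500).toFixed(1));  // 10172.5

// Smaller outputs: 500 requests of 500 x 500 px.
const small = processingUnits({ width: 500, height: 500, bands: 2, orthorectify: true });
console.log((small * 500).toFixed(2));   // 635.78

// Larger outputs via batch processing: 500 requests of 5000 x 5000 px.
const big = processingUnits({ width: 5000, height: 5000, bands: 2, orthorectify: true, batch: true });
console.log((big * 500).toFixed(1));     // 21192.8
```

The same function covers the other examples. Setting `samples: 500` reproduces Example 2 (about 10 172 PU). A 400 000 x 400 000 px output gives the 813 802 PU of Example 3, or about 271 267 PU with `batch: true`.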

Example 2

If you wanted to create a single request that uses 500 scenes in a multi-temporal script, e.g. to calculate the maximum NDVI over several years from 500 acquisitions, your request would also use 10 172 PU. That is the same as the 500 requests for a 2000 x 2000 px output in Example 1, given that all the other parameters are the same as in Example 1.

| Parameter | Your parameters | Multiplication factor |
|---|---|---|
| Output image size (width x height) | 2000 x 2000 = 4 000 000 | / (512 * 512) |
| Number of input bands | 2 | / 3 |
| Output format | 16 bit INT/UINT | x1 |
| Number of data samples per pixel | 500 | x500 |
| Orthorectification | Yes | x2 |

The calculation: (4 000 000 / 262 144) x (2 / 3) x 1 x 500 x 2 = 10 172 PU

The issue here is that this is a single request, so you would use more than 2 000 PU within one minute, and no package supports usage this heavy. Each package specifies how many PUs and requests you can use per month, as well as per minute. Your request would time out or fail. If you’re interested in very large time ranges, it’s best to use the Batch Processing API.

Example 3

Let’s suppose you want to order an orthorectified 16-bit Sentinel-1 image with 2 bands for the whole of Australia in full resolution. Let’s say Australia is covered by a 4000 x 4000 km bbox, which equals 4 million meters x 4 million meters. As the Sentinel-1 resolution is 10 meters, the output image will have to be 400 000 x 400 000 pixels large.

| Parameter | Your parameters | Multiplication factor |
|---|---|---|
| Output image size (width x height) | 400 000 x 400 000 = 160 000 000 000 | / (512 * 512) |
| Number of input bands | 2 | / 3 |
| Output format | 16 bit INT/UINT | x1 |
| Number of data samples per pixel | 1 | x1 |
| Orthorectification | Yes | x2 |

The calculation: (160 000 000 000 / 262 144) x (2 / 3) x 1 x 1 x 2 = 813 802 PU.

Processing API is limited to 2500 pixels for output width and height, so you would have to run this request with batch processing API. To do so, you would need to have an Enterprise account. As batch processing is cheaper, your batch processing request would use 813 802 / 3 = 271 267 PU. You can see that Enterprise S would be sufficient for this, as it offers 400 000 PU per month. And because batch processing is asynchronous and takes a while to ingest the tiles, you won’t be stopped by per minute rate limiting for a single request.
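The step from a bbox to an output size, and from there to the choice of API, can be sketched as follows. `planRequest` is a hypothetical helper. It assumes the bbox extent is given in meters (a projected CRS) and uses the 2500 px Processing API limit quoted above.

```javascript
// Sketch: output size in pixels from a bbox extent and resolution, checked
// against the 2500 px Processing API limit quoted on this page.
const PROCESSING_API_MAX_PX = 2500;

function planRequest(extentXMeters, extentYMeters, resolutionMeters) {
  // Number of pixels needed to cover the extent at the given resolution.
  const width = Math.ceil(extentXMeters / resolutionMeters);
  const height = Math.ceil(extentYMeters / resolutionMeters);
  const fits = width <= PROCESSING_API_MAX_PX && height <= PROCESSING_API_MAX_PX;
  return { width, height, api: fits ? "Processing API" : "Batch Processing API" };
}

// Example 3: a 4000 km x 4000 km bbox at 10 m resolution.
const australia = planRequest(4000000, 4000000, 10);
console.log(australia.width, australia.height, australia.api);
// 400000 400000 Batch Processing API

// A 20 km x 20 km area at 10 m fits into a single Processing API request.
console.log(planRequest(20000, 20000, 10).api); // Processing API
```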

In general, for smaller requests, even if you need many, Exploration and Basic should suffice. Note that the Exploration account doesn’t support commercial use. If you need large requests, such as whole countries or continents, or if you want longer time periods, you will need an Enterprise account to use batch processing. Custom options are also available if you need heavier use than the packages allow.

VAT will be charged if you are purchasing our services as:

- a company in the EU that is not VAT registered

- an individual from the EU

VAT won’t be charged if you are purchasing our services as:

- a VAT registered company in the EU

- a company outside the EU

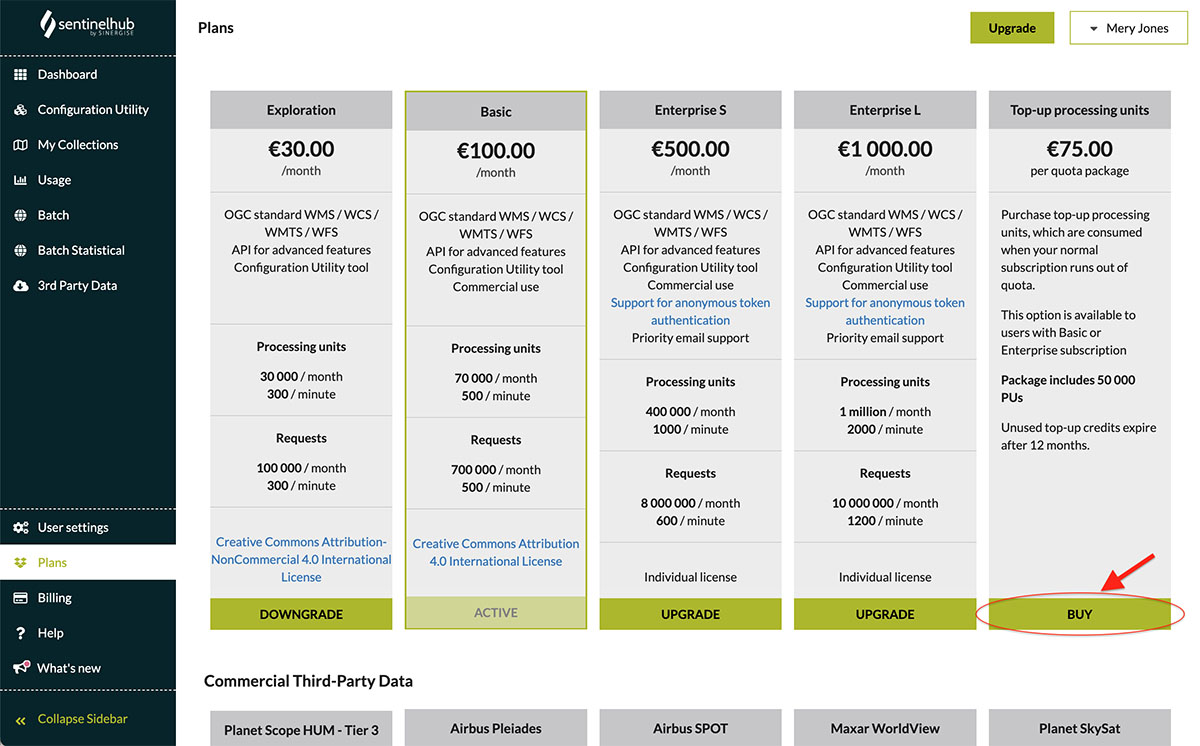

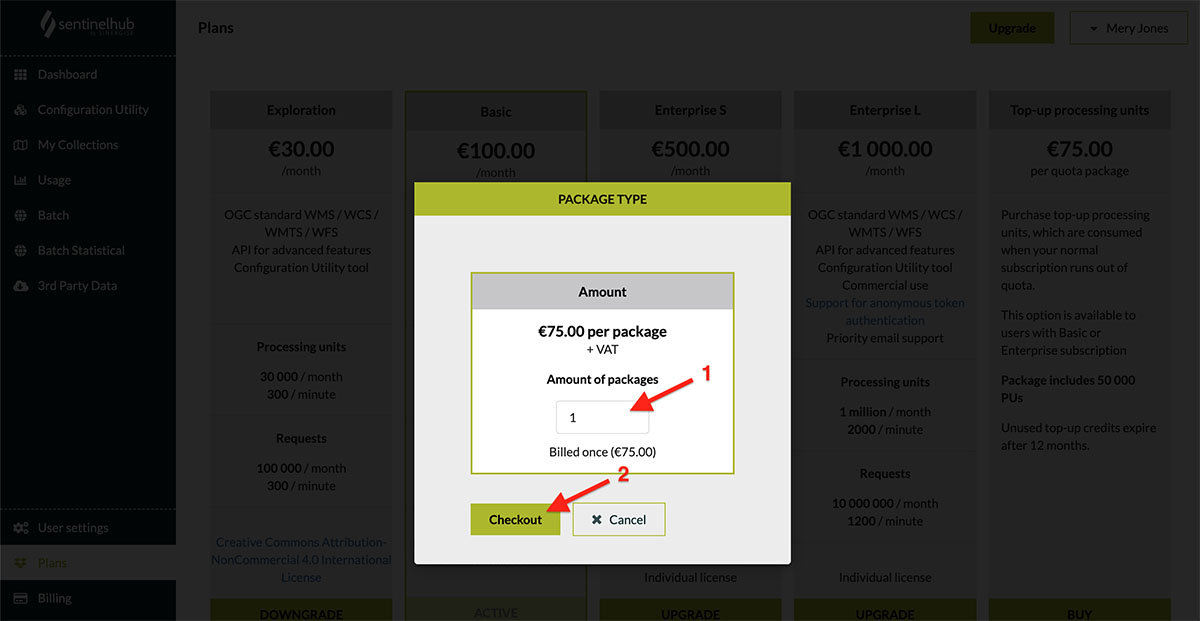

If you run out of processing units or requests in the middle of the month and want to continue using the services before the start of the next month, we recommend that you purchase the Top-up processing units package. These processing units/requests are consumed when your processing units/requests from the normal subscription run out. The unused processing units/requests from the Top-up processing units package are carried over to the next month and they expire after 12 months.

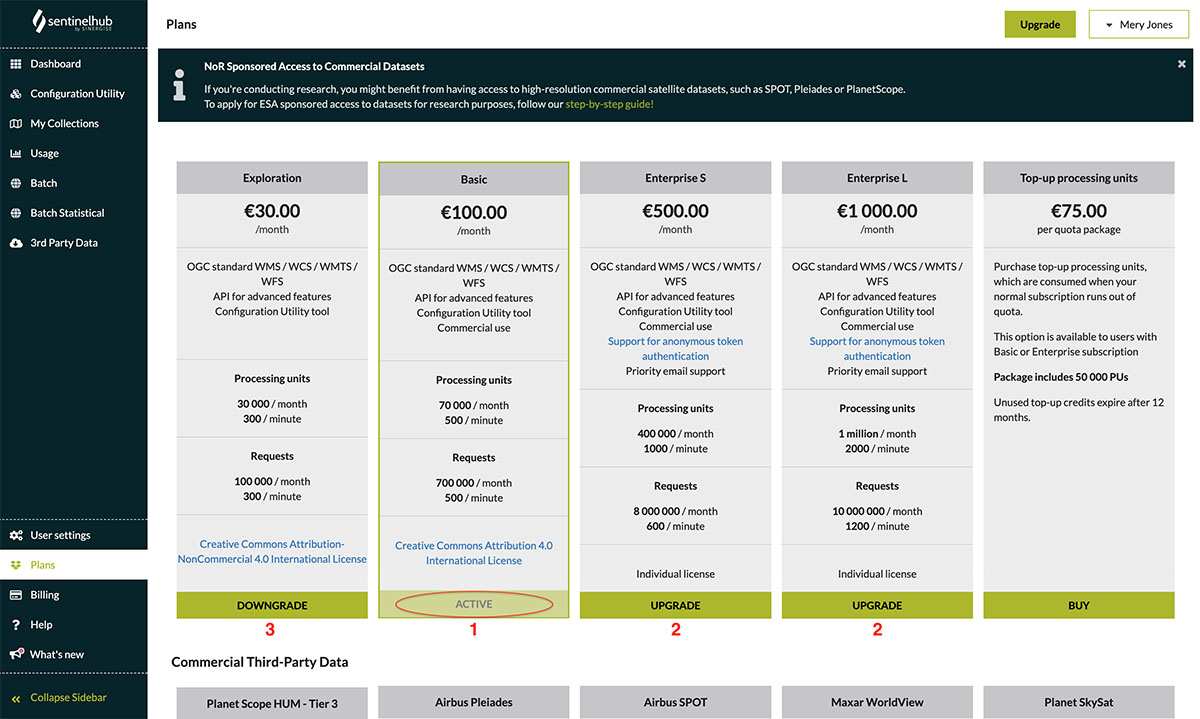

You can make the purchase in the Sentinel Hub Dashboard under the Plans tab.

A package contains 50,000 processing units and 100,000 requests. If you need more processing units or requests, you can select more packages (1) before you check out (2).

As stated on our pricing page, the above applies to all users with a Basic or Enterprise subscription.

Yes. You can go to the Dashboard and choose a recurring subscription option.

This gives you the option to set up automatic recurring payments by PayPal or credit card, which you can cancel at any time.

We generally recommend subscribing to an annual package, as it comes with a discount. Annual packages are not automatically renewed after one year. We will send you a renewal reminder one week before the expiry date.

If you wish to subscribe to Sentinel Hub for one month only, go to the Sentinel Hub Dashboard under the Plans tab, select the plan you wish to subscribe to and select the recurring subscription option. Follow the guided purchase process in the Sentinel Hub Dashboard and conclude your purchase. Then cancel your subscription as described here.

In order for the subscription to be valid for only one month, you must cancel it within 30 days from the day you set up the monthly subscription.

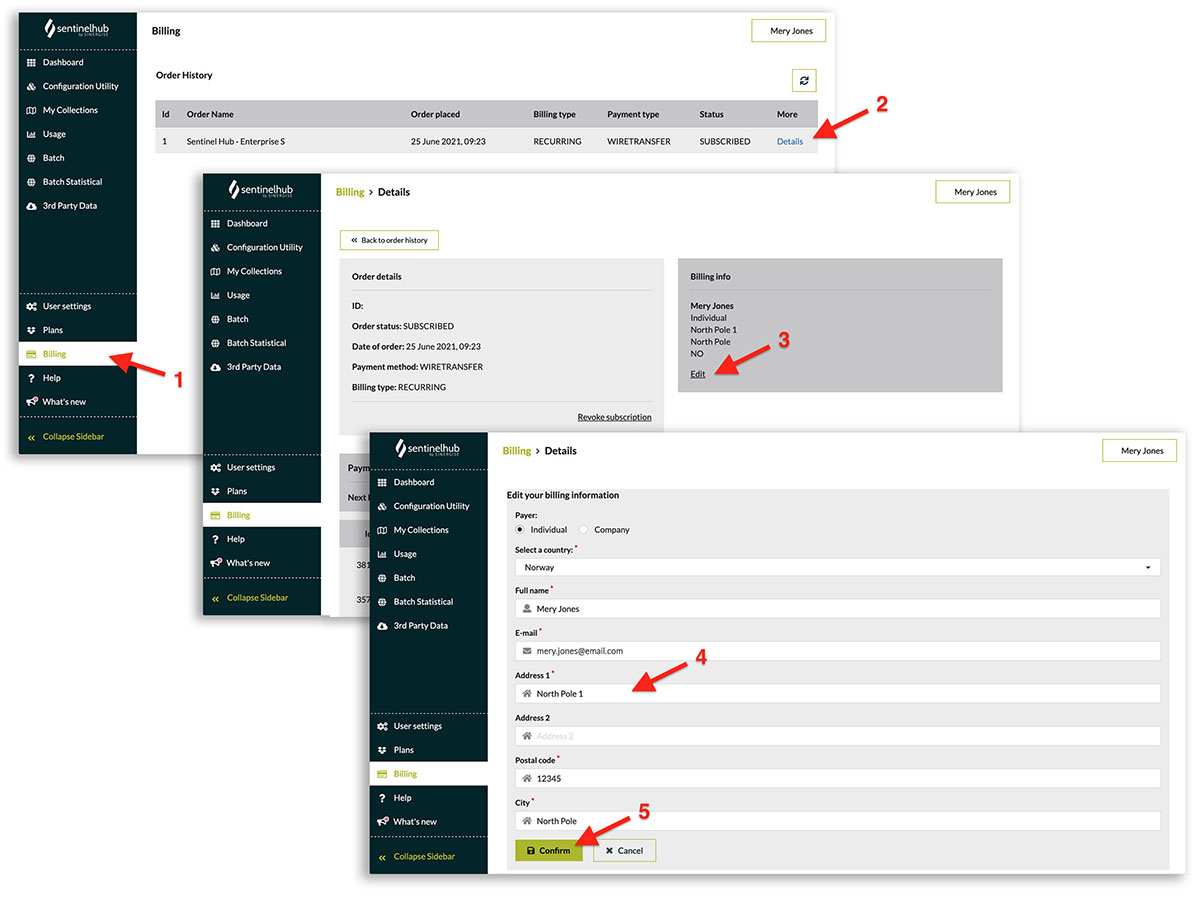

To change your payment method, two steps are needed:

- Revoke your existing subscription, which you can do in the Sentinel Hub Dashboard under the Billing tab / Order History. More details on how to revoke a subscription are described here. Your account will remain active until one month after the last payment.

- Once you have received an email confirmation from Sentinel Hub that your subscription has been cancelled, and before the end of the billing period of your cancelled subscription, set up a new subscription with your new preferred payment method in the Sentinel Hub Dashboard under the Plans tab.

If you only need to change the credit card details of your monthly subscription paid by credit card, please follow the steps described here.

To change your credit card details, please log in to the Sentinel Hub Dashboard and follow these steps:

- Go to the Billing tab.

- Click on the “Details” link for your current monthly subscription.

- Select the “Change credit card details” link displayed in the “Order details” box.

- Enter the new credit card details (card number, expiry date and CVC/CVV).

- Confirm by clicking on the “Submit” button.

- If you have entered valid credit card details, a pop-up window will appear with a confirmation message.

To change your billing address, please log in to the Sentinel Hub Dashboard and follow these steps:

- Go to the Billing tab.

- Click on the “Details” link of your active subscription.

- Click “Edit” in the billing info section (top right).

- Change your address.

- Confirm the change by clicking on the “Confirm” button.

You can cancel your subscription to the Sentinel Hub services in the Sentinel Hub Dashboard. The link will take you to the order history list. Click the “Details” link of the subscription you would like to cancel. Click the “Revoke subscription” button and confirm your choice. Your status will change to “revoked” and your subscription will be cancelled.

If you decide to cancel, you’ll be able to access Sentinel Hub’s premium features until the end of your current billing cycle. We don’t issue refunds.

To convert your subscription from a monthly to an annual subscription (which comes with a 17% discount), you must first cancel your existing subscription to Sentinel Hub services. Follow the steps described here to cancel your subscription.

Just before the end of the billing period of your cancelled subscription, set up a new subscription with the annual option in the Sentinel Hub Dashboard under the Plans tab.

Follow the same steps if you want to switch from an annual subscription to a monthly subscription.

You can change your plan in the Sentinel Hub Dashboard under the Plans tab. If you have an active plan, you will see it marked as “Active” (1).

To upgrade your plan, select the “Upgrade” button under the desired plan (2). To downgrade your plan, select the “Downgrade” button under the desired plan (3). In both cases, follow the guided purchase process in the Sentinel Hub Dashboard.

If you previously had a monthly subscription

Once a new subscription is set up for your upgraded or downgraded plan, your previous subscription will automatically be cancelled. It’ll take up to 5 minutes for the system to make the changes visible in your Sentinel Hub account. Please note that you’ll then need to log out and log back in to see the changes.

If you previously had an annual plan

Your annual plan won’t automatically renew, so no cancellation is required.

No paid plan is activated during the trial period.

Please note that Sentinel Hub trial account is not allowed for commercial use. After 30 days, you can choose the subscription service you want and subscribe. There is no automatic payment.

The differences in functionality or data between the trial account and the subscription account:

- The trial account is limited to 30,000 requests and 300 processing units per minute, while subscription accounts have higher limits. See our pricing packages for details.

- The trial account does not provide access to TPDI functionality (commercial data). For this, you need one of the subscription accounts.

- The trial account does not provide access to the batch API. For this, you need one of the Enterprise packages.

If you need higher throughput during the trial period, contact us.

The trial use is completely free, and there are no costs associated with it whatsoever. You do not need to cancel your account after the trial period expires, and there are no automatic charges.

After 30 days of the trial period:

- Access to OGC services will expire, but you will still be able to use EO Browser for non-commercial purposes.

- To continue using OGC services you will have the ability to subscribe to one of the plans directly in the Sentinel Hub Dashboard.

We do not charge anything automatically, nor do we extend a subscription period automatically. A week before the end of your annual plan, you will be notified about the upcoming expiry and asked whether you would like to extend the subscription. If you decide to extend it, you will be asked for an additional payment. Otherwise, your account will be frozen for one month and deleted afterwards.

If you decide to extend the subscription, go to the Sentinel Hub Dashboard under the Plans tab and choose one of the plans.

This gives you the option to set up automatic recurring payments by PayPal or credit card, which you can cancel at any time.

Yes! There are ESA sponsored accounts available. Visit our website on the Network of Resources for a step-by-step guide on how to apply!

Check also other Planet Science Programs available to researchers and students to utilize the high spatial and temporal resolution imagery for high impact science.

A definition of a processing unit is available in our documentation. The rules, with examples of how to calculate the number of processing units for a request, are also provided there.

The processing units and requests included in your Sentinel Hub plan are reset on the first day of each month. Unused processing units and requests are not carried over to the next month. If you run out of processing units and/or requests before the end of the month, we recommend purchasing the Top-up processing units package as described here.

More details about the processing units can be found in our documentation.

Available EO Data

Sentinel-1 - global coverage, full GRD archive

Sentinel-2 - global coverage, full archive

Sentinel-3 - global coverage, full OLCI archive

Sentinel-5P - global coverage

Landsat 1-5 MSS L1

Landsat 4-5 TM - global, Level-1 from August 1982 to May 2012, Level-2 from July 1984 to May 2012

Landsat 7 ETM+ - global, since April 1999

Landsat 8 - Since February 2013

Landsat 9 - since late 2021

Landsat 5, 7 and 8 - ESA Archive - Europe and North Africa, full archive

ENVISAT MERIS - global coverage, full archive

MODIS - Terra and Aqua, since January 2013

Digital Elevation Model – DEM - a static data collection based on MapZen’s DEM updates

Copernicus Services

Proba-V - Since October 2013

GIBS - Global Imagery Browse Services

For more details check also our list of available data collections on our web page and our Sentinel Hub Collections.

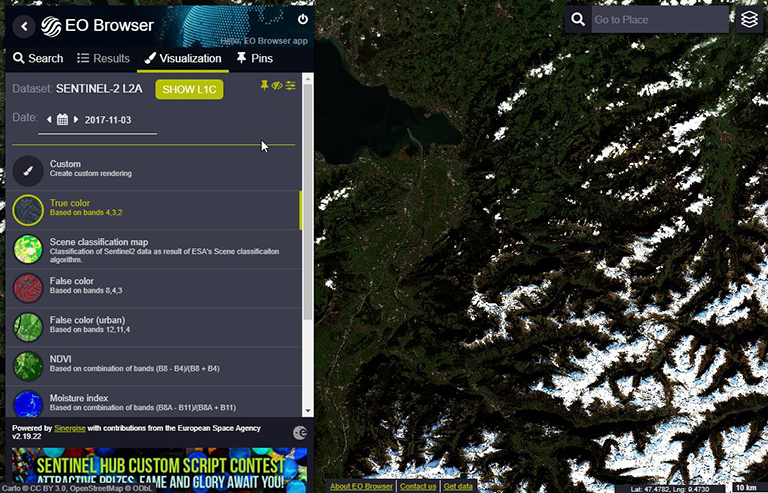

To explore availability, please check EO Browser.

To browse various data, go to EO Browser. Read about how to use it here.

We have deployed our services in several locations to provide the fastest access to the data. This is why there are different access points for different data collections. To learn which data collections are available at which locations, visit our page on data collections or our documentation for more details.

We put a lot of effort into making data available as soon as possible. However, we depend almost entirely on how fast the data provider can process and disseminate the data.

For Sentinel products, ESA usually makes them available on their OpenHub within 6-12 hours. The target timing is 3-6 hours, but sometimes it takes longer, even more than one day. We pull the data immediately, but downloading complete products takes some time, so it might take up to one additional hour before they are available on Sentinel Hub.

The best way to get information about all the available scenes in a specific area is to use our Catalog service API. It gives you detailed geospatial information for each tile, which you can control by specifying fields, limits and other properties. For this specific use case, it is most useful to use the distinct parameter with date, which returns a list of the available dates for your requested BBOX and time range. To make this Catalog API request, import the following curl request into Postman. You will need to authenticate the request with a token.

curl -X POST 'https://services.sentinel-hub.com/api/v1/catalog/search' \
  --header 'Authorization: Bearer <your access token>' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "bbox": [13, 45, 14, 46],
    "datetime": "2019-12-10T00:00:00Z/2020-12-15T00:00:00Z",
    "collections": ["sentinel-1-grd"],
    "limit": 50,
    "distinct": "date"
  }'

In the curl request above, the first line is the URL for the request, which you only need to change if you’re using data collections on different deployments. The first header is where you add your access token; if you authenticate the collection or the request in Postman, the token will be added automatically. The second header declares that the request body is sent as JSON.

In data-raw, you will specify the bbox, time range (datetime), data collection (collections), limit (the upper limit of the number of results), and with the line "distinct":"date", you will limit your results to only include information on the acquisition date. Consult the Catalog API reference to learn more about the available parameters.

The result for the CURL request above will look like this:

{
  "type": "FeatureCollection",
  "features": [
    "2019-12-10",
    "2019-12-11",
    "2019-12-12",
    "2019-12-15"
  ],
  "links": [
    {
      "href": "http://services.sentinel-hub.com/api/v1/catalog/search",
      "rel": "self",
      "type": "application/json"
    }
  ],
  "context": {
    "limit": 50,
    "returned": 4
  }
}

At the beginning of the result, you can see all the available dates listed for your request.
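The same Catalog request can also be sent from code. The sketch below uses the standard `fetch` API (Node.js 18+ or a browser). It assumes an OAuth client created in the Dashboard that authenticates through the OAuth 2.0 client credentials grant. The token endpoint URL in `TOKEN_URL` is an assumption, so verify it against the authentication documentation for your deployment. The helper names and environment variable names are hypothetical.

```javascript
// Sketch: the Catalog API request above, sent with fetch (Node.js 18+ or a
// browser). TOKEN_URL and the helper names are assumptions, not confirmed API.
const TOKEN_URL =
  "https://services.sentinel-hub.com/auth/realms/main/protocol/openid-connect/token";
const CATALOG_URL = "https://services.sentinel-hub.com/api/v1/catalog/search";

// Exchange an OAuth client's ID and secret for an access token
// (OAuth 2.0 client credentials grant).
async function getAccessToken(clientId, clientSecret) {
  const res = await fetch(TOKEN_URL, {
    method: "POST",
    headers: { "Content-Type": "application/x-www-form-urlencoded" },
    body: new URLSearchParams({
      grant_type: "client_credentials",
      client_id: clientId,
      client_secret: clientSecret,
    }).toString(),
  });
  if (!res.ok) throw new Error(`Token request failed: ${res.status}`);
  return (await res.json()).access_token;
}

// Same body as the curl example: distinct acquisition dates in a bbox.
function distinctDateSearch(bbox, from, to, collection, limit = 50) {
  return { bbox, datetime: `${from}/${to}`, collections: [collection], limit, distinct: "date" };
}

// POST the search; with "distinct": "date" the features are date strings.
async function availableDates(token, body) {
  const res = await fetch(CATALOG_URL, {
    method: "POST",
    headers: { Authorization: `Bearer ${token}`, "Content-Type": "application/json" },
    body: JSON.stringify(body),
  });
  if (!res.ok) throw new Error(`Catalog request failed: ${res.status}`);
  const result = await res.json();
  return result.features;
}

// Usage (needs network access; SH_CLIENT_ID / SH_CLIENT_SECRET are hypothetical
// environment variable names):
// const token = await getAccessToken(process.env.SH_CLIENT_ID, process.env.SH_CLIENT_SECRET);
// const body = distinctDateSearch([13, 45, 14, 46], "2019-12-10T00:00:00Z",
//   "2020-12-15T00:00:00Z", "sentinel-1-grd");
// console.log(await availableDates(token, body)); // list of date strings
```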

It’s also possible to search the available scenes using OGC WFS request, which might be a bit easier to use, but gives you much less search control.

To make a WFS request, you will need to add your INSTANCE_ID, specify the bbox, timerange (TIME), data collection (TYPENAMES), coordinate system (srsName) and a request type. Read more on the parameters here.

An example WFS request would look like this:

https://services.sentinel-hub.com/ogc/wfs/INSTANCE_ID?REQUEST=GetFeature&srsName=EPSG:3857&TYPENAMES=DSS2&BBOX=3238005,5039853,3244050,5045897&TIME=2019-02-11/2019-02-12

You can test the WFS examples with different parameters and inspect the results instantly here.

Note that you can also use the MAXCC parameter (maximum cloud coverage) in this call to filter for cloudless data. Use the FEATURE_OFFSET parameter to control the starting point within the returned features and the MAXFEATURES parameter to set the maximum number of features returned by a single request.

As a result you will get a list of all the available scenes for the chosen location in JSON format (or XML if set so). Some of the dates may be duplicated if there are two scenes available in the area. You should simply ignore these duplications.
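A WFS request URL can be assembled in code and the duplicated dates collapsed. This sketch assumes the JSON response is a GeoJSON feature collection whose features carry the acquisition date in `properties.date`. Check a real response before relying on that field name. `buildWfsUrl` and `uniqueDates` are hypothetical helpers, and `INSTANCE_ID` must be replaced with your own instance ID.

```javascript
// Sketch: build the WFS GetFeature URL from the example above and collapse
// duplicate dates. Helper names are hypothetical; the response layout
// (properties.date on each feature) is an assumption.
function buildWfsUrl(instanceId, { typeNames, bbox, time, srsName = "EPSG:3857",
                                   maxcc, maxFeatures, featureOffset }) {
  const params = new URLSearchParams({
    REQUEST: "GetFeature",
    srsName,
    TYPENAMES: typeNames,
    BBOX: bbox.join(","),
    TIME: time,
  });
  // Optional cloud-cover and paging parameters described above.
  if (maxcc !== undefined) params.set("MAXCC", String(maxcc));
  if (maxFeatures !== undefined) params.set("MAXFEATURES", String(maxFeatures));
  if (featureOffset !== undefined) params.set("FEATURE_OFFSET", String(featureOffset));
  return `https://services.sentinel-hub.com/ogc/wfs/${instanceId}?${params.toString()}`;
}

// Two scenes on the same day yield the same date twice; keep each date once.
function uniqueDates(featureCollection) {
  const dates = featureCollection.features.map((f) => f.properties.date);
  return [...new Set(dates)].sort();
}

const url = buildWfsUrl("INSTANCE_ID", {
  typeNames: "DSS2",
  bbox: [3238005, 5039853, 3244050, 5045897],
  time: "2019-02-11/2019-02-12",
  maxFeatures: 100,
});
console.log(url); // query values are percent-encoded, e.g. ":" becomes %3A
```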

In Sentinel Hub, the calculated and output values depend on what users specify in their evalscripts (or custom scripts). By calculated values we are referring to the values that are returned from the evaluatePixel() function or from a simple script. Output values are values returned from Sentinel Hub, after the calculated values go through formatting defined by sampleType. In the evalscript, calculated and output values are controlled by:

- In the setup() function, the requested bands and units define what values are used as input for the calculation (in simple scripts, default units are used). For example, if Sentinel-2 band B04 is requested in REFLECTANCE, the input values will be in the range 0–1. If Sentinel-2 band B04 is requested in DN (digital numbers), the input values for the calculation will be in the range 0–10000. Typical value ranges can be found in our data documentation, in the Units chapter for each data collection.

- The evaluatePixel() function defines the actual calculation (in simple scripts, the entire script is its equivalent). Sentinel Hub uses double precision for all calculations and rounds only the final calculated values before they are output.

- The value of the sampleType parameter in the setup() function defines the format of the output values. Possible values are AUTO, UINT8, UINT16 and FLOAT32. See our sampleType documentation for more details. When sampleType is not specified (e.g. in simple scripts), the default value AUTO is used. sampleType.AUTO takes calculated values from the interval 0–1 and stretches them to 0–255. If your calculated values are not in the range 0–1, make sure you either scale them to this range in the evaluatePixel() function or specify another sampleType.
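The three controls can be seen together in a minimal evalscript. This sketch requests Sentinel-2 band B04 in DN and writes it as UINT16, so integer values from 0 to 10000 pass through unchanged (compare the DN table in Example 2 below).

```javascript
//VERSION=3
// Sketch: request Sentinel-2 band B04 as digital numbers and write it as
// UINT16, so the calculated values (about 0-10000) are output unchanged.
function setup() {
  return {
    input: [{ bands: ["B04"], units: "DN" }],   // input values, not reflectance
    output: { bands: 1, sampleType: "UINT16" }, // integers 0-65535, no stretch
  };
}

function evaluatePixel(sample) {
  // The calculated value is the raw DN; UINT16 keeps it as is.
  return [sample.B04];
}
```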

Example 1: NDVI

In this example, we want to output values of the NDVI index, calculated from Sentinel-2 data. Our evaluatePixel() function is:

function evaluatePixel(sample) {
  let NDVI = (sample.B08 - sample.B04) / (sample.B08 + sample.B04)
  return [NDVI]
}

The requested units in this example do not have any influence on the calculated values of the NDVI. The output values returned by Sentinel Hub for different sampleTypes are:

| Calculated Value | sampleType.AUTO | sampleType.UINT8 | sampleType.UINT16 | sampleType.FLOAT32 |
|---|---|---|---|---|
| -1 | 0 | 0 | 0 | -1 |
| 0 | 0 | 0 | 0 | 0 |
| 0.25 | 64 | 0 | 0 | 0.25 |
| 1 | 255 | 1 | 1 | 1 |

Use sampleType: “FLOAT32” to return the full floating-point values from -1 to 1. See the example here.

If you do not need values but a visualization, you can use sampleType: “AUTO”, but make sure to either:

- map the NDVI values to the 0–1 interval in the evaluatePixel() function, e.g.:
  function evaluatePixel(sample) {
    let NDVI = (sample.B08 - sample.B04) / (sample.B08 + sample.B04)
    return [(NDVI + 1) / 2]
  }

- use a visualizer or a color visualization function, e.g. valueInterpolate.
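For the FLOAT32 output mentioned above, a complete NDVI evalscript could look like the sketch below. It requests B04 and B08 with default units, which do not affect the NDVI, and keeps the full -1 to 1 range.

```javascript
//VERSION=3
// Sketch: NDVI from Sentinel-2 output as FLOAT32, so the full -1 to 1 range
// is preserved instead of being clipped or rounded.
function setup() {
  return {
    input: [{ bands: ["B04", "B08"] }], // default units; NDVI is a ratio
    output: { bands: 1, sampleType: "FLOAT32" },
  };
}

function evaluatePixel(sample) {
  const ndvi = (sample.B08 - sample.B04) / (sample.B08 + sample.B04);
  return [ndvi];
}
```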

Example 2: Sentinel-2 band B04

In this example, we want to output raw values of Sentinel-2 band B04. Our evaluatePixel() function looks like this:

function evaluatePixel(sample) {
  return [sample.B04]
}

If we request units: “REFLECTANCE”, the output values returned by Sentinel Hub for different sampleTypes are:

| Calculated Value | sampleType.AUTO | sampleType.UINT8 | sampleType.UINT16 | sampleType.FLOAT32 |
|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 0 |
| 0.25 | 64 | 0 | 0 | 0.25 |
| 0.5 | 128 | 1 | 1 | 0.5 |
| 1 | 255 | 1 | 1 | 1 |
| 1.05 | 255 | 1 | 1 | 1.05 |

If we request units: “DN”, the output values returned by Sentinel Hub for different sampleTypes are:

| Calculated Value | sampleType.AUTO | sampleType.UINT8 | sampleType.UINT16 | sampleType.FLOAT32 |
|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 0 |
| 2500 | 255 | 255 | 2500 | 2500 |
| 5000 | 255 | 255 | 5000 | 5000 |
| 10000 | 255 | 255 | 10000 | 10000 |
| 10500 | 255 | 255 | 10500 | 10500 |

Example 3: Brightness Temperature Bands

Here we output Sentinel-3 SLSTR band F1, with typical values between 250 and 320 representing brightness temperature in Kelvin. The evaluatePixel() function is:

function evaluatePixel(sample) {
  return [sample.F1]
}

The output values returned by Sentinel Hub for different sampleTypes are:

| Calculated Value | sampleType.AUTO | sampleType.UINT8 | sampleType.UINT16 | sampleType.FLOAT32 |
|---|---|---|---|---|
| 250 | 255 | 250 | 250 | 250 |
| 255 | 255 | 255 | 255 | 255 |
| 275.3 | 255 | 255 | 275 | 275.3 |
| 320 | 255 | 255 | 320 | 320 |

Use sampleType:“FLOAT32” to return original values. If integer values are still acceptable for your application, use sampleType:“UINT16”.

If you do not need values but a visualization, you can use sampleType: “AUTO”, but make sure to either:

- map the values to the 0–1 interval in the evaluatePixel() function, e.g.:
  function evaluatePixel(sample) {
    return [sample.F1 / 320]
  }

- use a visualizer or a color visualization function, e.g. valueInterpolate.
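The output tables in Examples 1-3 are consistent with a simple rule. AUTO clips the calculated value to 0–1 and stretches it to 0–255. UINT8 and UINT16 round to the nearest integer and clip to their range. FLOAT32 keeps the value. The sketch below encodes that rule so you can predict outputs before running a request. The rounding and clipping details are inferred from the tables, not taken from the sampleType documentation, and `toOutput` is a hypothetical helper.

```javascript
// Sketch that reproduces the output tables in Examples 1-3. The rounding and
// clipping rules are inferred from those tables, not from the documentation.
function toOutput(value, sampleType) {
  const clamp = (v, lo, hi) => Math.min(hi, Math.max(lo, v));
  switch (sampleType) {
    case "AUTO":
      // Clip to 0-1, then stretch to 0-255.
      return Math.round(clamp(value, 0, 1) * 255);
    case "UINT8":
      // Round to the nearest integer and clip to 0-255.
      return clamp(Math.round(value), 0, 255);
    case "UINT16":
      // Round to the nearest integer and clip to 0-65535.
      return clamp(Math.round(value), 0, 65535);
    case "FLOAT32":
      // Kept as a float (32-bit precision loss is ignored in this sketch).
      return value;
    default:
      throw new Error(`Unknown sampleType: ${sampleType}`);
  }
}

const types = ["AUTO", "UINT8", "UINT16", "FLOAT32"];
for (const v of [-1, 0.25, 1.05, 275.3]) {
  console.log(v, types.map((t) => toOutput(v, t)));
}
```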

Sentinel Hub offers open data (e.g. Sentinel, Landsat), as well as commercial data (e.g. Planet and Maxar). Open data are always served in their full resolution. Each of the collections has its own maximum resolution, which you can check in our documentation (see here for Sentinel-2). What you get for Sentinel-2 in EO Browser is always a full resolution image. If you open the effects in EO Browser and enable NEAREST upscaling and downscaling, you will see individual pixels displayed. You can even measure them to confirm that the pixel size indeed matches the maximum resolution of the sensor. Users get the same data with the free trial and with paid packages. Packages differ in commercial use licence, support levels and the kind of processing you can do on the data, but not in data quality.

If you’re using processing API, you can get full resolution by specifying the maximum resolution the collection offers using the resx/resy parameter. See the full resolution example for Sentinel-2.
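A sketch of such a Processing API request body in JavaScript is shown below. It assumes the Process API's `output.resx`/`output.resy` fields (in CRS units, here meters in a UTM zone) and the `sentinel-2-l2a` data collection type. The bbox, EPSG code and time range are illustrative placeholders, and `fullResolutionRequest` and `outputSize` are hypothetical helpers. The body would be POSTed as JSON with a bearer token to the Processing API endpoint, assumed here to be `https://services.sentinel-hub.com/api/v1/process`.

```javascript
// Sketch: a Processing API request body for Sentinel-2 L2A at full 10 m
// resolution, assuming the output.resx/resy fields (CRS units, here meters).
const evalscript = `//VERSION=3
function setup() {
  return { input: ["B02", "B03", "B04"], output: { bands: 3 } };
}
function evaluatePixel(sample) {
  return [2.5 * sample.B04, 2.5 * sample.B03, 2.5 * sample.B02];
}`;

function fullResolutionRequest(bbox, epsg, from, to) {
  return {
    input: {
      bounds: {
        bbox, // [minX, minY, maxX, maxY] in the CRS below
        properties: { crs: `http://www.opengis.net/def/crs/EPSG/0/${epsg}` },
      },
      data: [{ type: "sentinel-2-l2a", dataFilter: { timeRange: { from, to } } }],
    },
    output: { resx: 10, resy: 10 }, // Sentinel-2 maximum resolution in meters
    evalscript,
  };
}

// Output size in pixels implied by resx/resy; the Processing API accepts
// at most 2500 px in each direction.
function outputSize(bbox, resx, resy) {
  return [Math.round((bbox[2] - bbox[0]) / resx), Math.round((bbox[3] - bbox[1]) / resy)];
}

// Illustrative 20 km x 20 km bbox in UTM zone 33N (EPSG:32633).
const bbox = [400000, 5000000, 420000, 5020000];
const body = fullResolutionRequest(bbox, 32633, "2023-06-01T00:00:00Z", "2023-06-30T23:59:59Z");
console.log(outputSize(bbox, body.output.resx, body.output.resy)); // [ 2000, 2000 ]
```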

If you need higher resolution, you will need data from a different satellite that offers it. If the 10 meter resolution that Sentinel-2 offers isn’t enough, you can look into our commercial data, where you can get image resolution of up to 0.5 meters. Note that in order to purchase commercial data, you need a paid Sentinel Hub subscription first.

Yes, atmospherically corrected Sentinel-2 L2A data has been available globally since 2017. For Europe, it has been available since 2015. For more information about S2L2A, visit our atmospheric correction page and our documentation.

If you find missing tiles in Sentinel Hub, we recommend that you first check whether the data has even been published by the European Space Agency (ESA). The best way to check this is to use the “Search” function in the Copernicus Browser.

There can be several reasons for missing data - for example, there could be an error in the processing chain of the Copernicus ground segment, or it could be debris avoidance. Sometimes there are also problems with the satellite itself (e.g. Sentinel-1B failed completely in December 2021 and the data has been unavailable ever since). If there is an interruption in satellite operation, this is logged in ESA’s Events Viewer. If the data cannot be found there, we recommend that you address the question to ESA.

If the data is present in the Copernicus Browser but missing in the Sentinel Hub, please send us a link to the specific data, including the product identifiers, and we will take a look.

Some areas never have cloud coverage below 20%. Playground uses a default maximum cloud coverage of 20%, so for such areas it may seem that no satellite imagery is available. However, if you move the maximum cloud coverage slider to 100%, you can see the available data.

We would like to serve data as they are, without uncontrolled changes, because it is almost impossible to set color balance to one fitting all places in the world and all groups of users. You can still tweak contrast in several ways.

You can tweak “Gain” (brightness) to automatically equalize the image.

You can tweak “Gamma” (contrast) as well.

Use atmospherically corrected data, i.e. S2L2A instead of S2L1C. As you can see in the image below, atmospheric correction increases the contrast, because it corrects for the effects of the atmosphere.
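The sketch below shows what gain and gamma adjustments do to pixel values. It is written in JavaScript, the language evalscripts use. It assumes a common formulation, out = (gain × value)^(1 / gamma), clamped to [0, 1]. The function name `adjust` is hypothetical, and EO Browser's own Gain and Gamma sliders may be implemented differently.

```javascript
// Hypothetical helper: applies gain (brightness) and gamma (contrast curve)
// to a reflectance value, assuming out = (gain * value)^(1 / gamma).
function adjust(value, gain = 1, gamma = 1) {
  // Scale by gain and clamp to the displayable range [0, 1]
  const scaled = Math.min(Math.max(gain * value, 0), 1);
  // With this formula, gamma > 1 brightens mid-tones and gamma < 1 darkens them
  return Math.pow(scaled, 1 / gamma);
}

// A dark true-color pixel (B04, B03, B02 reflectances)
const pixel = [0.06, 0.08, 0.1];
console.log(pixel.map((v) => adjust(v, 2.5).toFixed(3)));
console.log(pixel.map((v) => adjust(v, 2.5, 1.5).toFixed(3)));
```

Inside an evalscript, the same idea is usually applied in evaluatePixel, for example by multiplying each band by a gain factor before returning it.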

Source Data Using the WFS Request

To access metadata of the scene or to see which tiles are available, use the WFS request. Get started by checking out the WFS use examples.

Source Data Using the WFS Request on S3

If you need the source data used for a particular scene, you can use a WFS request with the same parameters as for WMS. The response will contain a path attribute, for example:

"path": "s3://sentinel-s2-l1c/tiles/10/S/DG/2015/12/7/0"

You can then access these data on AWS, where they are stored, by appending the relevant filenames to this path. See the typical structure of a scene on AWS below.

If you want to download the data from outside the AWS network, change the address to:

https://sentinel-s2-l1c.s3.amazonaws.com/tiles/10/S/DG/2015/12/7/0

Typical structure of a scene:

```
aws s3 ls s3://sentinel-s2-l1c/tiles/10/S/DG/2015/12/7/0/
                           PRE auxiliary/
                           PRE preview/
                           PRE qi/
2017-08-27 20:39:26    2844474 B01.jp2
2017-08-27 20:39:26   83913648 B02.jp2
2017-08-27 20:39:26   89505314 B03.jp2
2017-08-27 20:39:26   97645600 B04.jp2
2017-08-27 20:39:26   27033174 B05.jp2
2017-08-27 20:39:26   27202251 B06.jp2
2017-08-27 20:39:26   27621995 B07.jp2
2017-08-27 20:39:26  100009241 B08.jp2
2017-08-27 20:39:26    2987729 B09.jp2
2017-08-27 20:39:26    1218596 B10.jp2
2017-08-27 20:39:26   27661057 B11.jp2
2017-08-27 20:39:26   27712884 B12.jp2
2017-08-27 20:39:26   27909008 B8A.jp2
2017-08-27 20:39:26  134890604 TCI.jp2
2017-08-27 20:39:26     379381 metadata.xml
2017-08-27 20:39:26     166593 preview.jp2
2017-08-27 20:39:49     106056 preview.jpg
2017-08-27 20:39:47       1035 productInfo.json
2017-08-27 20:39:26       1678 tileInfo.json
```
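The address change above follows a simple pattern: s3://bucket/key becomes https://bucket.s3.amazonaws.com/key. The JavaScript sketch below automates this conversion and builds the URL of each band file shown in the listing. The helper names are hypothetical. Depending on the bucket's access policy (for example, if it is a requester-pays bucket), anonymous HTTPS downloads may not work.

```javascript
// Hypothetical helper: converts "s3://bucket/key" (as in the WFS "path"
// attribute) into the "https://bucket.s3.amazonaws.com/key" form.
function s3PathToHttps(s3Path) {
  const match = /^s3:\/\/([^\/]+)\/(.+)$/.exec(s3Path);
  if (!match) throw new Error(`Not an s3:// path: ${s3Path}`);
  const [, bucket, key] = match;
  // Drop any trailing slash so filenames can be appended cleanly
  return `https://${bucket}.s3.amazonaws.com/${key.replace(/\/+$/, "")}`;
}

// Band files as they appear in the scene listing above
const BANDS = ["B01", "B02", "B03", "B04", "B05", "B06", "B07",
               "B08", "B8A", "B09", "B10", "B11", "B12"];

function bandUrls(s3Path) {
  const base = s3PathToHttps(s3Path);
  return BANDS.map((band) => `${base}/${band}.jp2`);
}

const scenePath = "s3://sentinel-s2-l1c/tiles/10/S/DG/2015/12/7/0";
console.log(s3PathToHttps(scenePath));
// https://sentinel-s2-l1c.s3.amazonaws.com/tiles/10/S/DG/2015/12/7/0
console.log(bandUrls(scenePath).join("\n"));
```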

We put a lot of effort into leaving the data as they are, without modifying them. However, this also means that some errors in the data reach our users. One of these is geolocation shift.

Our CNES colleague Olivier Hagolle has written a good blog post on this topic. We suggest reading it and asking ESA and Copernicus to address the issue.

Recommendation: Whenever possible, you should work with the data in the same coordinate system as they are produced in, i.e. UTM (in the same zone!) for Sentinel-2. This will prevent the inaccuracy resulting from reprojections, rounding, etc.

However, if you need to convert a resolution in meters to degrees, we recommend the following approach:

- latitude resolution = real-world ground distance resolution / 111226.26

- longitude resolution = real-world ground distance resolution / 111226.26 / cos(lat)

- lat = the latitude of the point at which the conversion needs to take place

Example:

You are at (lat, lon) = (45, 10) and want to know how many degrees correspond to 10 m:

- latitude resolution = 10 / 111226.26 ~= 0.000090

- longitude resolution = 10 / 111226.26 / cos (45 degrees) ~= 0.000127

Notes

- coordinate systems are complicated, even more so as you move towards the poles

- the formulas above hold for real-world distances; projected distances (Web Mercator, UTM, etc.) are not the same thing and can be complicated to convert. That said, UTM distances are usually quite close to real-world distances, so the formulas should also give reasonable results when converting between UTM and WGS84

- cos(lat) must be computed with the latitude in radians, not degrees, i.e. cos(latDegrees * PI / 180)
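The formulas and the worked example above can be checked with a short JavaScript sketch. It uses the same constant (111226.26 m per degree) and the same spherical approximation. The function name is hypothetical, and the function refuses latitudes at the poles, where cos(lat) reaches zero.

```javascript
// Approximate ground-distance-to-degrees conversion using the formulas above.
const METERS_PER_DEGREE = 111226.26;

function metersToDegrees(meters, latDegrees) {
  if (Math.abs(latDegrees) >= 90) {
    throw new RangeError("Longitude resolution is undefined at the poles");
  }
  const latRadians = (latDegrees * Math.PI) / 180; // Math.cos expects radians
  const latRes = meters / METERS_PER_DEGREE;
  const lonRes = meters / METERS_PER_DEGREE / Math.cos(latRadians);
  return { latRes, lonRes };
}

const { latRes, lonRes } = metersToDegrees(10, 45);
console.log(latRes.toFixed(6), lonRes.toFixed(6)); // 0.000090 0.000127
```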

Commercial data

Sentinel Hub currently supports the Planet PlanetScope, Planet SkySat Archive and Maxar WorldView/GeoEye commercial data collections. Due to a different licensing model, we cannot provide free access to these data collections. You therefore first have to purchase quota for the constellation you need and order the imagery through us (most commonly as ongoing monitoring of a specific area on a daily, weekly or monthly basis). Once the order is processed, we make the purchased data available through Sentinel Hub services in the same manner as other data.

Note: Before you can purchase commercial quota, you need a paid Sentinel Hub subscription. The free trial does not include commercial data.

We prepared several resources for you to get started with commercial data:

- See the available bands and resolution for PlanetScope, SkySat Archive and Maxar WorldView.

- See our Commercial Data Webinar, where you will learn all about commercial data in Sentinel Hub. It goes into detail on where to find all the relevant documentation, how to order data (including time-series data) and visualize it, view statistical information, import data into your own GIS and get it sponsored by the Network of Resources.

- See also our PDF tutorial on how to search, order and visualize commercial data in Requests Builder and Postman.

- See how to search, order and visualize commercial data within EO Browser.

- Learn about the PlanetScope Hectares under management model and how to create a subscription.

- See commercial data samples on the providers' websites - Planet and Maxar WorldView.

- See our Flickr album for some commercial data examples (not full resolution).

If you're conducting research or precommercial exploitation, you can get commercial data sponsored by applying to ESA's Network of Resources. To help you apply, we have prepared a step-by-step guide on how to submit a proposal.

Also check the other Planet Science Programs, which allow researchers and students to use high spatial and temporal resolution imagery for high-impact science.

Commercial pricing generally depends on the mission you want to purchase (each has its own pricing packages), the size of the area you need in km2, and how many acquisitions you need for the same area. Quota consumption is calculated from the total area in km2 and the number of acquisitions: each additional acquisition of the same area consumes that area again. For illustration, a Pleiades quota package costs 100 euros and gives you 11 km2 to process, which you can use in the following ways:

- 1 order of 11 km2 with 1 acquisition,

- 2 orders of 5.5 km2, each with one acquisition

- 1 order of 5.5 km2 with 2 acquisitions

- 3 orders of 3.6 km2, each with one acquisition

- 1 order of 2.2 km2 with 5 acquisitions

- …
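The arithmetic behind these examples is area multiplied by the number of acquisitions, summed over all orders. The JavaScript sketch below applies it. The helper name is hypothetical, and the 11 km2 package is the illustrative Pleiades package above, not a general rule.

```javascript
// Hypothetical helper: quota consumed by a set of orders, assuming
// consumption = area (km2) x number of acquisitions, as in the examples above.
function quotaUsedKm2(orders) {
  return orders.reduce((sum, o) => sum + o.areaKm2 * o.acquisitions, 0);
}

const PACKAGE_KM2 = 11; // the illustrative Pleiades package above
const EPSILON = 1e-9;   // tolerance for floating-point rounding (e.g. 2.2 * 5)

const scenarios = {
  "1 order of 11 km2, 1 acquisition": [{ areaKm2: 11, acquisitions: 1 }],
  "2 orders of 5.5 km2, 1 acquisition each": [
    { areaKm2: 5.5, acquisitions: 1 },
    { areaKm2: 5.5, acquisitions: 1 },
  ],
  "1 order of 5.5 km2, 2 acquisitions": [{ areaKm2: 5.5, acquisitions: 2 }],
  "3 orders of 3.6 km2, 1 acquisition each": [
    { areaKm2: 3.6, acquisitions: 1 },
    { areaKm2: 3.6, acquisitions: 1 },
    { areaKm2: 3.6, acquisitions: 1 },
  ],
  "1 order of 2.2 km2, 5 acquisitions": [{ areaKm2: 2.2, acquisitions: 5 }],
};

for (const [label, orders] of Object.entries(scenarios)) {
  const used = quotaUsedKm2(orders);
  const fits = used <= PACKAGE_KM2 + EPSILON;
  console.log(`${label}: ${used.toFixed(2)} km2, fits in package: ${fits}`);
}
```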

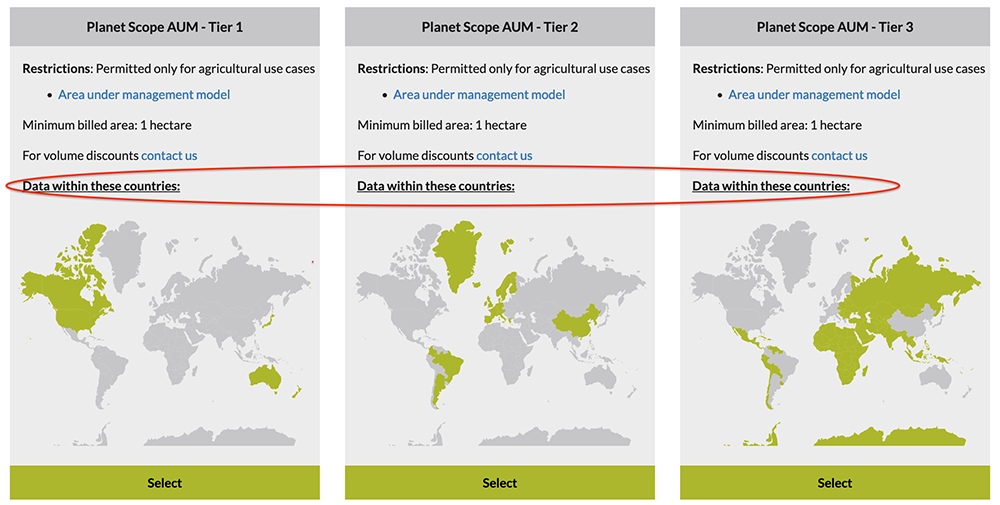

An exception to this model is Planet PlanetScope, which uses a Hectares under Management model. This means that you subscribe to a parcel and then get all archive and new data for that parcel for one year. One quota package includes 100 ha, and all available products for these 100 ha are included at no additional charge. Pricing for PlanetScope therefore depends on the size of the area you need to monitor (for 300 ha, you would buy 3 quota packages) and on which of the three tiers you need (this depends on the country where your polygon of interest is located).

Note that commercial products are clipped to your polygon.

See commercial pricing packages here.

Commercial data samples can be found on the data providers' websites (Planet and Maxar WorldView).

We cannot provide you with a sample of a specific location, as we would first have to purchase the image from the data provider.

Some examples of full resolution commercial imagery, although the images are not in full size, can be found on our commercial data Flickr album.

Data availability differs between collections. Some collections only acquire imagery when tasked to do so (Planet SkySat and Maxar WorldView), so your location will only have an image if someone has previously tasked a satellite to capture it. Sentinel Hub offers access to the full data archive, but tasking is not yet supported. Planet PlanetScope, on the other hand, systematically captures the whole globe every day.

It is thus possible that no image is available for your polygon at the time of your interest, or that the available image is cloudy. Or, your location could have hundreds of quality images.

It’s best to check for data availability in EO Browser, where you can search archive data and see low resolution previews of products. This is best done before you purchase commercial quota, as we can’t offer refunds. Searching is available to all users with a paid Sentinel Hub subscription, and no API key is needed, so you can check if data you need is there beforehand.

See how to search and order commercial data in EO Browser and Requests Builder.

EUSI Maxar WorldView data are available to customers from the following countries:

Albania, Algeria, Andorra, Armenia, Austria, Azerbaijan, Belarus, Belgium, Bosnia and Herzegovina, Bulgaria, Croatia, Cyprus, Czech Republic, Denmark, Egypt, Estonia, Finland, France, Georgia, Germany, Greece, Hungary, Iceland, Ireland, Israel, Italy, Kosovo, Latvia, Liechtenstein, Lithuania, Luxembourg, Macedonia, Malta, Moldova, Monaco, Montenegro, Morocco, Netherlands, Norway, Poland, Portugal, Romania, San Marino, Serbia, Slovakia, Slovenia, Spain, Sweden, Switzerland, Tunisia, Turkey, Ukraine, United Kingdom, Vatican City.

Customers have access to full and global archive.

In a nutshell

You subscribe to your areas of interest (e.g. agricultural fields) and, for a period of one year, you can access all available PlanetScope data for those areas - both archive and monitoring data. This subscription model is ideal for agriculture and other continuous monitoring use cases.

More details

- The price of the annual subscription depends on the country for which you want to download data. See Billing for more information about Tiers.

- Users can purchase quota in packages of 500 hectares for each of the Tiers. This quota can be spread over several separate areas (parcels), each with a minimum size of 1 hectare.

- The subscription period starts at the time of the first purchase and lasts 12 months.

- If the User requires additional quota, they can purchase it at any time during the year ("Top-up"). Subsequent purchases are co-termed with the original purchase (the price is proportional to the remaining period). During the last three months of the subscription period, you are required to subscribe for one additional year.

- If the User consumes more than they have subscribed to, a 20% overage fee applies. We therefore recommend purchasing quota up front.

- The total “area under management” is defined as “the area of union of all polygons used in the orders within a period of one year”.
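The "union of all polygons" rule can be illustrated with a simplified JavaScript sketch. It assumes a hypothetical representation in which each AOI is rasterized into 1 ha grid cells (100 m × 100 m), so the union area is simply the number of distinct cells. Actual accounting works on the exact polygon geometry.

```javascript
// Simplified illustration: each AOI is a set of 1 ha grid cells ("x,y" keys).
// This grid representation is hypothetical; real orders use exact polygons.
function rectangleCells(x0, y0, width, height) {
  const cells = new Set();
  for (let x = x0; x < x0 + width; x++) {
    for (let y = y0; y < y0 + height; y++) cells.add(`${x},${y}`);
  }
  return cells;
}

// Area under management = area of the union of all AOIs ordered in the period
function areaUnderManagementHa(aois) {
  const union = new Set();
  for (const aoi of aois) for (const cell of aoi) union.add(cell);
  return union.size; // one cell = 1 ha
}

const polygonA = rectangleCells(0, 0, 10, 10);      // 100 ha
const polygonAPrime = rectangleCells(0, 0, 12, 10); // A enlarged to 120 ha

console.log(areaUnderManagementHa([polygonA, polygonA]));      // 100
console.log(areaUnderManagementHa([polygonA, polygonAPrime])); // 120
```

Ordering the same polygon twice adds no new cells, and enlarging it only adds the cells outside the original polygon.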

Follow-up questions

Q: If I order data for polygon A today and then again for the same polygon a week from now, do I consume quota twice? A: No. As long as you are fetching data for the same polygon, its area is only consumed once.

Q: If I order data for polygon A today and then enlarge the polygon a bit (polygon A'), how much quota will I consume? A: As long as polygon A lies fully within polygon A', you will consume the total area of polygon A' (the "union of polygons" rule).

Q: If I purchase 500 hectares today and another 500 hectares in 6 months, how much will I pay? A: Your first purchase will be at the full price, and the second one at a 50% discount (as it only covers the remaining 6 months).
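The co-terming rule can be sketched in JavaScript. The sketch assumes that the price of a top-up is simply proportional to the number of months remaining in the 12-month period. The function name and the price of 1000 are hypothetical placeholders, and the rule for the last three months of the subscription period is not modelled.

```javascript
// Hypothetical helper: price of a co-termed top-up, assuming the price is
// proportional to the months left in the 12-month subscription period.
function coTermedPrice(fullAnnualPrice, monthsRemaining) {
  if (monthsRemaining < 0 || monthsRemaining > 12) {
    throw new RangeError("monthsRemaining must be between 0 and 12");
  }
  return fullAnnualPrice * (monthsRemaining / 12);
}

const fullPrice = 1000; // placeholder, not an actual list price
console.log(coTermedPrice(fullPrice, 12)); // 1000 (first purchase)
console.log(coTermedPrice(fullPrice, 6));  // 500 (top-up after 6 months)
```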

PlanetScope tiers differ only in price: each package gives you 500 ha of quota. Each tier covers its own set of countries for which you can purchase data. For example, if you want to order data for a polygon in Greece, you need to purchase the second tier, as only the second tier covers Greece.

To see which countries each tier covers, check the "Data within these countries" note under each tier.

When you purchase PlanetScope quota for the first time, we will configure 2 new collections and 2 PlanetScope configurations for you. One collection is for 4-band and one for 8-band data; at first, you will only use one of them. The two configurations differ only in the collection they use. We will also send you an email containing the API key that you will need to enter when making an order.

For PlanetScope, you can order data directly (orders) or subscribe to all the data for a specific area of interest (AOI). For the latter, see the FAQ on PlanetScope subscriptions.

The easiest way to order commercial data for the first time is in EO Browser. Please check out this short video demonstration of ordering Pleiades data in EO Browser to learn about the general process.

Next, follow the specific steps for ordering PlanetScope data below.

First steps

- Log in to EO Browser with your Sentinel Hub account.

- On the map, draw the polygon for which you want to order data, using the tools on the right side of the screen.

- Navigate to the Commercial data tab in the menu on the left.

- To check your available quota, scroll down and open the My Quotas section.

Search options

- Area of interest - after drawing your area of interest on the map, this will tell you its size in km2.

- From and To - select the time range of your search.

- Constellation - select Planet PlanetScope.

- Item type - leave the default, PSScene.

- Product bundle - select analytic_8b_sr_udm2 for 8-band data, available only since 2018 (recommended), or select analytic_sr_udm2 with only 4 bands if you also need data from before 2018.

- Max. Cloud Coverage - set your desired maximum cloud coverage.

- Click Search to see your search results.

Results

- Look through the search results. By expanding the Show details section under each result, you can find additional data on coverage (how much of your AOI is covered by the tile), snow cover, cloud cover, etc.

- Clicking the magnifying glass icon will show you the preview of the result, when available.

- Add products to your order by clicking add for each one. Note that products will be clipped to your AOI.

- Click the Prepare order button. This will not yet execute the order.

Order options

- Order name - give a name to your order.

- Order type - leave the default, products, to order the products that you clicked add for in the previous steps. If you wish to include all the available products that satisfy your criteria, consider making a PlanetScope subscription instead.

- Products - the IDs of the products that you added from your search results are listed here.

- Collection ID* - if the Product bundle you selected above was analytic_8b_sr_udm2, select the collection called My PlanetScope 8-band data. If it was analytic_sr_udm2, select the collection called My PlanetScope 4-band data.

- Order size (approx) - an approximate calculation of your order size, which influences the quota used. Order size depends on both the size of your AOI and the number of added products.

- Order limit (km2) - your personal safeguard, preventing you from creating oversized orders. If your desired order size exceeds this limit, feel free to increase it.

- Harmonize to - radiometric harmonization of PlanetScope imagery to imagery captured by Sentinel-2 or PS2. You may leave this at the default. Learn about the options here.

- Planet API key - enter the API key we sent you after you purchased quota.

- Click Create order. This moves your order to My orders, subsection Created orders. The order will not be executed yet.

*Note that different bundles are not compatible within the same collection - that is why we have created two collections for you to choose from, based on when the data you’re ordering was taken.

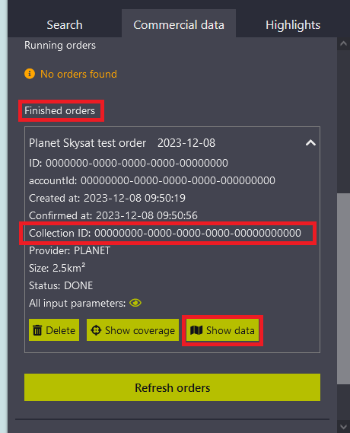

My orders

- In My orders, subsection Created orders (Not confirmed), you have the final chance to review your order. If you want to confirm it, click confirm.

- While the order is being processed, it will appear in the subsection Running orders. Wait for the process to finish and check its status by refreshing. When done, your order will move to the Finished orders subsection.

- In the Finished orders subsection, click show data. This will visualize your ordered PlanetScope data on the map. The layers you see are configured in the PlanetScope configuration we created for you in Dashboard.

- If you have several orders, you can always revisit them and click show data for each one.

For more information on ordering commercial data see the following sources:

When ordering PlanetScope data, users have two main options - Individual Orders and Subscriptions.

What is the Subscriptions API and how does it differ from Order by Query?

When configuring a subscription, the user subscribes to a specific area of interest (polygon) and then gets all the products for that parcel in a specified time range (which can also be open-ended). This option is especially useful for ongoing monitoring of a specific area, such as an agricultural parcel. Subscriptions are similar to Order by Query, but there are several important differences between the two:

- with the Subscriptions API, there is no limit on how many products can be ordered, while Order by Query is limited to 250 products.

- the Subscriptions API time range can cover not only past and present dates, but also future dates. A user can thus subscribe to all the data for a specific parcel for the past 5 years, as well as to all future data (as long as the commercial subscription is active). Past data products will then be ingested quickly, and future data products will be ingested automatically as soon as they become available.

Subscription API pricing

There is no additional cost for using this option. PlanetScope data are offered under the Hectares under Management model, which is priced per area monitored per year anyway (see this FAQ).

How to configure a Subscription?

See the documentation on PlanetScope subscriptions, and check the API reference as well. To create a subscription, you will need a Sentinel Hub account and purchased PlanetScope quota. If you are conducting research or need commercial data for precommercial exploitation, you can get your Sentinel Hub account and PlanetScope quota sponsored by ESA's Network of Resources.

Subscribing to Planet data is easiest using our Requests Builder. See also our commercial data webinar, where you can see how to make individual orders in Requests Builder (making subscriptions is very similar to PlanetScope Order by Query).

- Set the desired time range. You can set any time range you like, including an open one that only specifies the start time. To get an open subscription, leave the "To" parameter empty ("To":""). This will extend your subscription indefinitely.

- Set your area of interest.

- Set the maximum allowed cloud coverage and search for data to see the currently available products.

- Specify the name of your subscription.

- Specify a target collection either manually or by selecting it from the dropdown menu. If you want to create a new collection for this purpose, select "create a new collection". For each subsequent subscription, it is recommended to ingest into an existing collection. Note that only Planet collections with compatible bands will be available to choose from; for example, if your new subscription has analytic_sr_udm2 set as the Product Bundle, only your Planet collections ingested with the analytic_sr_udm2 Product Bundle will be offered.

- Enter your Planet API key (acquired after purchasing PlanetScope TPDI quota).

- Place your order by clicking the Place Order button. This will not yet execute the subscription. The order status will be CREATED.

- Confirm your order by clicking the Start button. This will start your subscription and its status will change to RUNNING. Note that in case your subscription extends into the future, its status will stay RUNNING until the “To” date passes and until all products are ingested. All the products that are already available will start ingesting right away (might take a few hours if there are many).

- Next, check your Subscription in the Dashboard. Open the 3rd Party Data tab (1) and toggle on the Subscriptions panel (2). Here you will see all your commercial subscriptions listed.

- Opening the Subscription will show the collection ID (green, to be used to access data), order status (blue), geometry (violet) and ingested products, their status (DELIVERED, PROCESSING, DONE or FAILED), as well as the creation date (orange).
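For those who prefer the API over Requests Builder, the JavaScript sketch below shows roughly how the fields from the steps above might map onto a request body. The JSON field names (`input`, `provider`, `bounds`, `dataFilter`, `timeRange`, and so on) are assumptions modelled on our reading of the Third Party Data Import API, so verify them in the API reference before use. The helper name is hypothetical, and representing an open-ended subscription by omitting `to` is also an assumption.

```javascript
// Hypothetical helper that assembles a subscription request body from the
// Requests Builder fields described above. Field names are assumptions.
function buildSubscriptionBody({
  name,
  collectionId,
  planetApiKey,
  geometry,
  from,
  to,
  maxCloudCoverage,
  productBundle = "analytic_sr_udm2",
}) {
  // Leaving out "to" is how this sketch represents an open-ended subscription
  const timeRange = to ? { from, to } : { from };
  const body = {
    name,
    input: {
      provider: "PLANET",
      planetApiKey,
      bounds: { geometry },
      data: [
        {
          itemType: "PSScene",
          productBundle,
          dataFilter: { timeRange, maxCloudCoverage },
        },
      ],
    },
  };
  // Ingest into an existing collection if one is given
  if (collectionId) body.collectionId = collectionId;
  return body;
}

const body = buildSubscriptionBody({
  name: "my-parcel-monitoring",
  planetApiKey: "<your Planet API key>",
  geometry: {
    type: "Polygon",
    coordinates: [[[14.50, 46.02], [14.52, 46.02], [14.52, 46.03], [14.50, 46.03], [14.50, 46.02]]],
  },
  from: "2020-01-01T00:00:00Z",
  maxCloudCoverage: 30,
});
console.log(JSON.stringify(body, null, 2));
```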

Visualize Subscription data

Visualizing commercial subscription data is no different from visualizing commercial data ordered using the Order option. See our webinar on commercial data to learn all about commercial data, including how to visualize it.

Various data collections available through Geocento that are not supported by Sentinel Hub can be easily acquired from Geocento through EarthImages and integrated into Sentinel Hub as Third-Party Data Imports.

Geocento has integrated its data purchasing platform with Sentinel Hub in such a way that you can buy the data there and deliver it to Sentinel Hub for interactive exploration. Follow this step-by-step guide for an easy way to get started.

Step-By-Step Guide

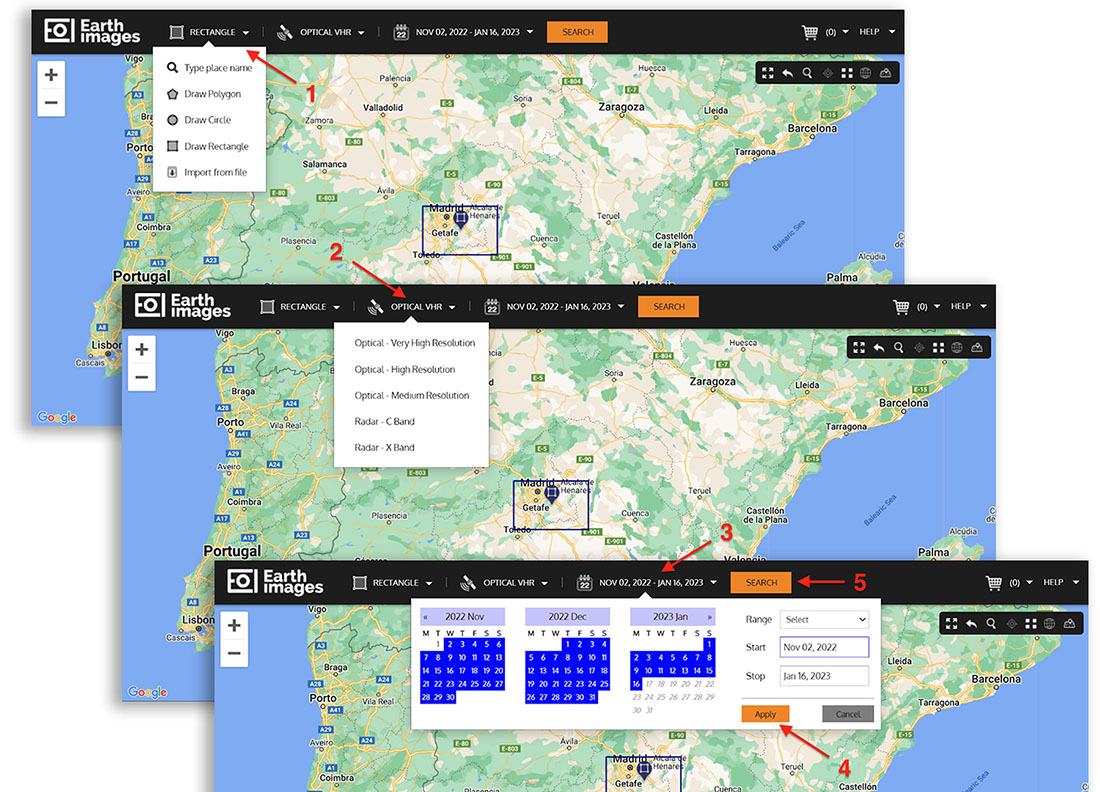

Go to https://imagery.geocento.com and select your area of interest [1] (if not already defined). Select a type of imagery [2] and the time period you are interested in [3]. When you are satisfied with a time selection, click the Apply button [4]. Click Search button [5] and browse the results.

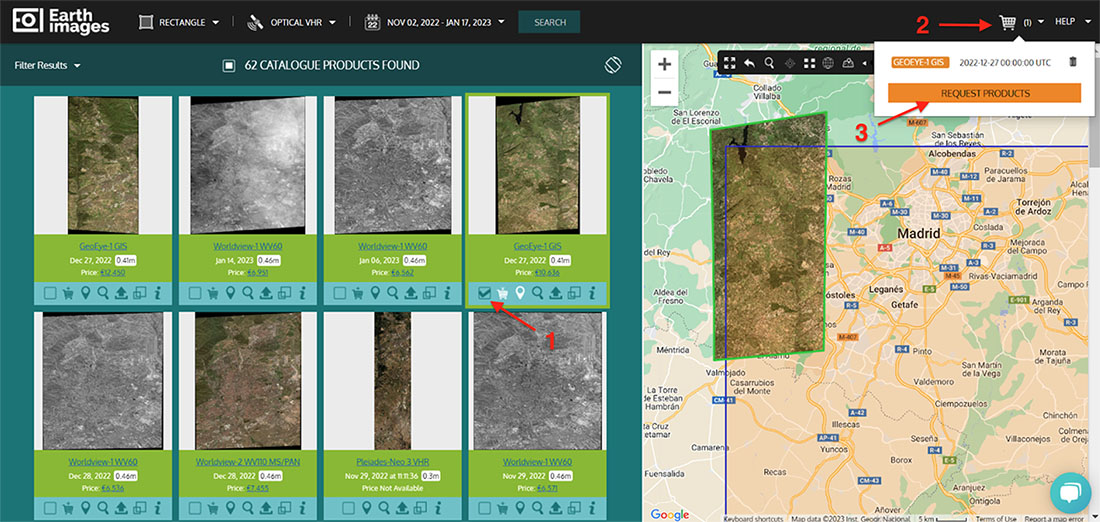

Add imagery to the cart [1] and check out [2,3].

Configure and send your request.

Fill in your information and submit.

Your order will then be created. You will receive a confirmation email.

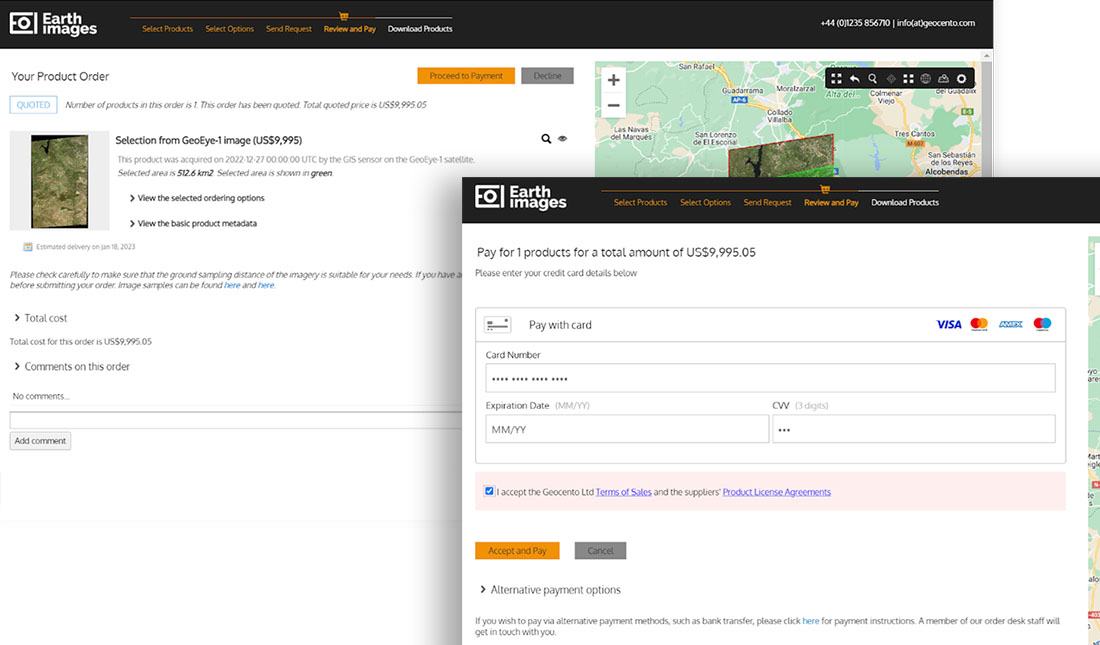

When the request is processed by our order desk, you will receive instructions, e.g. for licence signing or payment. Follow the instructions in the emails.

Once your order has been confirmed, you will be asked to pay via our online payment form. Use the “Request alternative payment” link if you wish to pay by a means other than a credit card.

As soon as your order is paid, the order desk will request the product and deliver it to your order. You will receive an email as soon as the product(s) are ready for download.

You can now go to your order and download the product(s). At the bottom of the page you will find links to import your data into Sentinel Hub.

Click on the link to proceed. The application will ask you for the password to download the order and the login details for the Sentinel Hub OAuth client.

Once you have entered the information, EarthImages will transfer all the products in the order to your Sentinel Hub BYOC collections and tiles will be created automatically.

Go to your Sentinel Hub account to view the newly created collections. Use your Sentinel Hub credentials to log in.

EO Browser

Simply write coordinates in the “Search places” tool in “lat,lon” form, e.g.:

46.0246,14.5367

and press Enter.
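A common pitfall is axis order. GeoJSON (RFC 7946) stores positions as [longitude, latitude], while the "Search places" box expects "lat,lon". The hypothetical JavaScript helper below converts a GeoJSON Point into that search string.

```javascript
// Hypothetical helper: GeoJSON positions are [longitude, latitude],
// while the "Search places" box expects "lat,lon".
function toSearchString(point, decimals = 4) {
  if (!point || point.type !== "Point") {
    throw new TypeError("Expected a GeoJSON Point");
  }
  const [lon, lat] = point.coordinates;
  if (Math.abs(lat) > 90 || Math.abs(lon) > 180) {
    throw new RangeError("Coordinates out of range");
  }
  return `${lat.toFixed(decimals)},${lon.toFixed(decimals)}`;
}

const examplePoint = { type: "Point", coordinates: [14.5367, 46.0246] };
console.log(toSearchString(examplePoint)); // 46.0246,14.5367
```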

Because the EO Browser timelapse feature can process at most 300 images at once, creating longer timelapses requires some post-processing. This is especially useful for data collections like MODIS, with data available all the way back to the year 2000. Take a look at this MODIS timelapse of the Aral Sea, spanning 2002 to 2019.

The approach is to download several shorter timelapses from EO Browser as .gif files, convert them to .mp4 files on the command line, and then merge them. For example, to create an 18-year timelapse, you would download six timelapses, each three years long.

After downloading the gifs from EO Browser, convert them into .mp4 files. To do so, first download and install ffmpeg, a command-line program for processing video. Follow this tutorial to install ffmpeg.

To test whether ffmpeg is installed, open the command prompt (CMD) and type: ffmpeg -version.

To transform a gif into an mp4, open your command prompt, navigate to the folder where your gif files are stored, and run the following command:

```
ffmpeg -i GIF1.gif -movflags faststart -pix_fmt yuv420p -vf "scale=trunc(iw/2)*2:trunc(ih/2)*2" GIF1vid.mp4
```

The only part of the code you need to change is the name of your gif at the start and the name of the output .mp4 video at the end.

You need to transform all your .gif timelapses into separate .mp4 files:

```
ffmpeg -i GIF1.gif -movflags faststart -pix_fmt yuv420p -vf "scale=trunc(iw/2)*2:trunc(ih/2)*2" GIF1vid.mp4
ffmpeg -i GIF2.gif -movflags faststart -pix_fmt yuv420p -vf "scale=trunc(iw/2)*2:trunc(ih/2)*2" GIF2vid.mp4
ffmpeg -i GIF3.gif -movflags faststart -pix_fmt yuv420p -vf "scale=trunc(iw/2)*2:trunc(ih/2)*2" GIF3vid.mp4
```

Finally, merge all the .mp4 files into a final .mp4 file with the following code:

```
ffmpeg -i GIF1vid.mp4 -i GIF2vid.mp4 -i GIF3vid.mp4 -filter_complex "[0:0] [1:0] [2:0] concat=n=3:v=1:a=0" FinalVideo.mp4
```

In this command, we list the .mp4 files we want to merge, each prefaced with -i, and specify the output name of the final .mp4 timelapse at the end. The filter needs one stream specifier per input video, in the form [input index:stream index]: [0:0] for the first one, [1:0] for the second one, [2:0] for the third one, and so on. n=3 indicates how many input .mp4 files there are, and v=1:a=0 means that each segment has one video stream and no audio stream.

For 6 input .mp4 files, the code would look like this:

```
ffmpeg -i GIF1vid.mp4 -i GIF2vid.mp4 -i GIF3vid.mp4 -i GIF4vid.mp4 -i GIF5vid.mp4 -i GIF6vid.mp4 -filter_complex "[0:0][1:0][2:0][3:0][4:0][5:0] concat=n=6:v=1:a=0" FinalVideo.mp4
```
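Writing these commands by hand becomes tedious as the number of timelapses grows. The JavaScript (Node.js) sketch below only generates the command strings, following the GIF1.gif / GIF1vid.mp4 naming pattern used above. The function names are hypothetical, and you still run the printed commands yourself.

```javascript
// Hypothetical generator for the ffmpeg commands described above.
function gifToMp4Command(i) {
  return `ffmpeg -i GIF${i}.gif -movflags faststart -pix_fmt yuv420p ` +
    `-vf "scale=trunc(iw/2)*2:trunc(ih/2)*2" GIF${i}vid.mp4`;
}

function concatCommand(n, output = "FinalVideo.mp4") {
  const inputs = [];
  const streams = [];
  for (let i = 0; i < n; i++) {
    inputs.push(`-i GIF${i + 1}vid.mp4`);
    streams.push(`[${i}:0]`); // input index i, stream 0 (its video stream)
  }
  return `ffmpeg ${inputs.join(" ")} -filter_complex ` +
    `"${streams.join("")} concat=n=${n}:v=1:a=0" ${output}`;
}

// All conversion commands first, then the merge command
function buildCommands(n) {
  const cmds = [];
  for (let i = 1; i <= n; i++) cmds.push(gifToMp4Command(i));
  cmds.push(concatCommand(n));
  return cmds;
}

buildCommands(6).forEach((cmd) => console.log(cmd));
```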

In EO Browser, you can view two kinds of statistical information for your imagery: a line chart of values through time for either a point or a polygon, and a histogram of values (see the chapter Statistical Analysis on our EO Browser page). To enable the statistical features, your layers must use an evalscript that supports the Statistical API.

Because statistics operate on the indicator values themselves (not on RGB), you must add an additional output to the evalscript that returns the value without conversion to color. For example, statistics can be calculated for the values of B04 or for an index like NDVI. Additionally, the evalscript should include dataMask to exclude no-data values from the calculation (no-data pixels often have the value 0, which could skew the statistics). It is also recommended to check for the presence of clouds so that they can be excluded as well (cloudy pixels usually have very high values, which could also skew the results). You can exclude any other pixels from the statistics too, for example snow or water.
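The effect of these masks on a simple statistic can be sketched as follows. This is a simplified JavaScript illustration, not how the Statistical API computes its results. Each pixel is a hypothetical [value, cloud flag, dataMask] triple that mirrors the eobrowserStats and dataMask evalscript outputs, and the values are made-up B04 reflectances.

```javascript
// Simplified illustration, not the Statistical API's implementation.
// Each pixel is a hypothetical [value, cloudFlag, dataMask] triple.
function meanIndex(pixels, { useMasks = true } = {}) {
  let sum = 0;
  let count = 0;
  for (const [value, cloud, dataMask] of pixels) {
    // Skip no-data pixels (dataMask 0) and cloudy pixels (cloud flag 1)
    if (useMasks && (dataMask === 0 || cloud === 1)) continue;
    sum += value;
    count += 1;
  }
  return count > 0 ? sum / count : NaN;
}

// Made-up B04 reflectances: two clear pixels, a no-data pixel stored as 0,
// and a bright cloudy pixel
const samplePixels = [
  [0.04, 0, 1],
  [0.06, 0, 1],
  [0, 0, 0],
  [0.65, 1, 1],
];
console.log("masked mean:", meanIndex(samplePixels));
console.log("unmasked mean:", meanIndex(samplePixels, { useMasks: false }));
```

With the masks applied, the mean reflects only the two clear pixels. Without them, the no-data zero and the bright cloud pull the mean far from the clear-sky value.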

The evalscript should contain the following outputs, with the exact same names:

- default: Mandatory. A visualization output that defines what will be displayed on the map. The visualization can return anything you like: a single-value grayscale, an RGB visualization, etc.

- index: Optional. Returns the raw values of your index, enabling the histogram functionality. Use FLOAT32 format to include decimals and negative values.

- eobrowserStats: Mandatory. An output containing both the index values and cloud information, used for line chart generation. The cloud information allows tiles to be filtered by maximum cloud coverage. Use FLOAT32 format to include decimals and negative values.

- dataMask: Mandatory. A dataMask output to take no-data values into account.

Here is an example evalscript for NDVI:

```javascript
//VERSION=3
function setup() {
  return {
    input: ["B04", "B08", "SCL", "dataMask"],
    // The four outputs are declared here and filled in evaluatePixel().
    output: [
      { id: "default", bands: 4 },
      { id: "index", bands: 1, sampleType: "FLOAT32" },
      { id: "eobrowserStats", bands: 2, sampleType: "FLOAT32" },
      { id: "dataMask", bands: 1 }
    ]
  };
}

// Optional cloud filtering using the Sentinel-2 L2A scene classification (SCL) band.
// Classes 1, 2, 7, 8, 9, 10 and 11 (saturated/defective, dark area, unclassified,
// cloud medium/high probability, thin cirrus, snow) are treated as unusable.
function isCloud(scl) {
  return [1, 2, 7, 8, 9, 10, 11].includes(scl);
}

function evaluatePixel(samples) {
  const val = index(samples.B08, samples.B04); // NDVI = (B08 - B04) / (B08 + B04)
  const indexVal = samples.dataMask === 1 ? val : NaN; // NDVI without no-data values
  const cloud = isCloud(samples.SCL); // true for cloudy (or otherwise unusable) pixels

  // RGB NDVI visualization; the 4th value (transparency) is the data mask
  let imgVals;
  if (val < -0.5) imgVals = [0.05, 0.05, 0.05, samples.dataMask];
  else if (val < -0.2) imgVals = [0.75, 0.75, 0.75, samples.dataMask];
  else if (val < -0.1) imgVals = [0.86, 0.86, 0.86, samples.dataMask];
  else if (val < 0) imgVals = [0.92, 0.92, 0.92, samples.dataMask];
  else if (val < 0.025) imgVals = [1, 0.98, 0.8, samples.dataMask];
  else if (val < 0.05) imgVals = [0.93, 0.91, 0.71, samples.dataMask];
  else if (val < 0.075) imgVals = [0.87, 0.85, 0.61, samples.dataMask];
  else if (val < 0.1) imgVals = [0.8, 0.78, 0.51, samples.dataMask];
  else if (val < 0.125) imgVals = [0.74, 0.72, 0.42, samples.dataMask];
  else if (val < 0.15) imgVals = [0.69, 0.76, 0.38, samples.dataMask];
  else if (val < 0.175) imgVals = [0.64, 0.8, 0.35, samples.dataMask];
  else if (val < 0.2) imgVals = [0.57, 0.75, 0.32, samples.dataMask];
  else if (val < 0.25) imgVals = [0.5, 0.7, 0.28, samples.dataMask];
  else if (val < 0.3) imgVals = [0.44, 0.64, 0.25, samples.dataMask];
  else if (val < 0.35) imgVals = [0.38, 0.59, 0.21, samples.dataMask];
  else if (val < 0.4) imgVals = [0.31, 0.54, 0.18, samples.dataMask];
  else if (val < 0.45) imgVals = [0.25, 0.49, 0.14, samples.dataMask];
  else if (val < 0.5) imgVals = [0.19, 0.43, 0.11, samples.dataMask];
  else if (val < 0.55) imgVals = [0.13, 0.38, 0.07, samples.dataMask];
  else if (val < 0.6) imgVals = [0.06, 0.33, 0.04, samples.dataMask];
  else imgVals = [0, 0.27, 0, samples.dataMask];

  // Return the four outputs declared in setup()
  return {
    default: imgVals,
    index: [indexVal],
    eobrowserStats: [indexVal, cloud ? 1 : 0],
    dataMask: [samples.dataMask]
  };
}
```

See the script in EO Browser.

The evalscript can be used directly in EO Browser via the custom script function, or set up for a layer in the Sentinel Hub Configuration Utility.

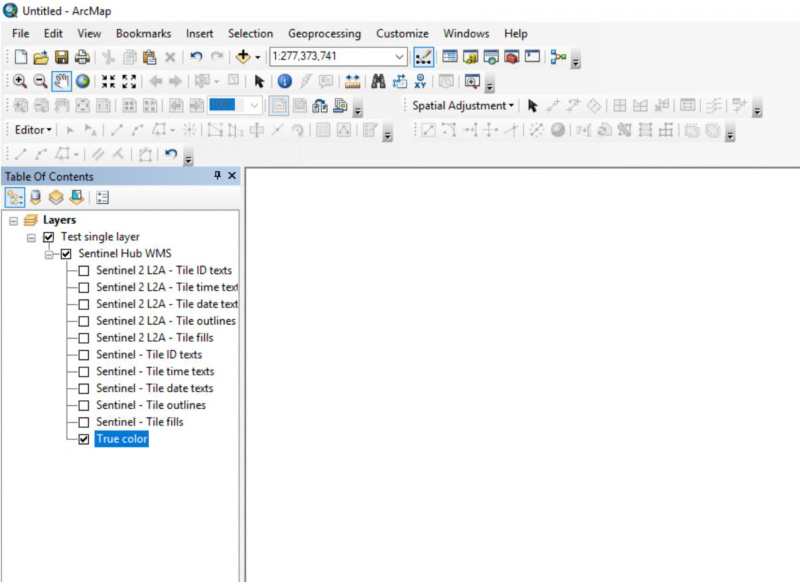

When you create a custom layer, you can select the same layer in EO Browser. See section 2 of this FAQ to learn how to visualize your configuration in EO Browser, or follow the steps below.

- In EO Browser, first select your configuration from the dropdown menu in Themes at the top of the Search tab.

- Select the desired data source (if all layers in your configuration have the same data source, only one will be available).

- Make sure to set the appropriate time range and zoom in to the area where your data is available. This is especially important for BYOC and TPDI data.

- Click Search and select one of the results.

- Select your layer in the Visualization tab.

- When you have your layer displayed, draw a point or a polygon on the map and inspect the statistics features.

For more information on how to create configurations and layers see our Webinar, and for BYOC collections, see this FAQ.

To view your collection in EO Browser, you first need to create a new configuration with visualization layers.

To follow the steps below, you will need a Sentinel Hub account and a collection ID.

Your collection ID is located in your  tab of the configuration utility, where all your collections with ingested tiles are located.

There are also some public collections available on our GitHub, where the collection ID can be found for each of them.



1. Create a new configuration

1.1. Without a template

- Copy your collection ID to the clipboard and go to the ![]() tab.

- Click on the ![]() button.

- Choose a name for your configuration and click on the green ![]() button to submit.

- When the configuration is created, you will see an empty configuration. Continue to section 1.3 to create a new layer.

1.2. With a template

BYOC templates with prepared visualization layers are available for commercial data (PlanetScope) and for some publicly available collections.

- Copy your collection ID to the clipboard and go to the Configuration Utility tab.

- Click the button for adding a new configuration.

- Choose a name for your configuration and select the optional configuration template matching your data (e.g. Sentinel-2 L2A 120 m Mosaic).

- When done, click the button to submit.

- When the configuration is created, you will see a couple of layers already prepared. Click the expand arrow next to each layer title to see additional information.

- You will see that the source is set to Bring Your Own COG and that the Collection ID field displays a string of zeroes. Replace this string with your collection ID in each layer of your configuration.

- Save each changed layer by clicking the save button next to it.


1.3. Create a new layer

- In your configuration, click the “Add new layer” button.

- Choose a name for your layer and set the source to Bring Your Own COG.

- Setting the source to Bring Your Own COG enables the Collection ID field, which will be empty. Enter your collection ID into this field.

- Click the pencil icon next to Data Processing to open the scripting window.

- For BYOC, no data products are available, so you will have to write a visualization script (evalscript) in the window on the right. To do so, you need to know which bands are available in your ingested data and what they are called. If your data comes from a constellation supported by Sentinel Hub (e.g. Sentinel-2, Landsat 8, DEM), you can find band information and example evalscripts in our data documentation and in our custom script repository.

- When your evalscript is inserted, submit it by clicking the corresponding button. Note that an evalscript is required by Sentinel Hub, as it tells the system how to visualize your data.

- When done, click “Save” to save your layer settings.
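As a starting point for the BYOC scripting step above, here is a minimal visualization sketch. The band names "Red", "Green" and "Blue", the 0 to 10000 value range and the brightness factor are all assumptions for illustration; replace them with the actual band names and value range of your own collection.

```javascript
//VERSION=3
// Hypothetical BYOC visualization: "Red", "Green" and "Blue" stand in for the
// band names of your own collection; replace them with the real names.
function setup() {
  return {
    input: [{ bands: ["Red", "Green", "Blue"] }],
    output: { bands: 3 }
  };
}

// Assumed: band values are reflectances scaled to 0..10000.
const SCALE = 10000;
// Assumed brightness factor; tune it for your data.
const GAIN = 2.5;

function evaluatePixel(sample) {
  // Scale each band to roughly 0..1 and brighten it for display.
  return [
    GAIN * sample.Red / SCALE,
    GAIN * sample.Green / SCALE,
    GAIN * sample.Blue / SCALE
  ];
}
```

If the layer renders black or white after saving, the assumed SCALE or GAIN is usually the first thing to check against your data.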


2. Visualize Your Configuration in EO Browser

- Open EO Browser and click the login button at the top right to log in to your Sentinel Hub account.

- Expand the dropdown menu under Theme at the top. Find your configuration by name and select it.

- Toggle on the data collections listed under Data Sources. For BYOC, the offered data collection will usually match the name of your configuration or visualization layers.

- On the map, zoom to the area where your tiles are ingested and select a time range that includes the acquisition time of your ingested tiles.

- If you are unsure of the dates and locations of your tiles, check them on GitHub if you are working with a public collection, or click the tile in your collection in the Bring Your Own COG section of the Dashboard. See how to do so here (20:38 - 21:00).

- When done, click the Search button.

- If your search parameters are set correctly, your tiles should be listed in the search results and appear on the map. Select one of your tiles in the results list.

- Your data will be visualized with the layers from your configuration. Feel free to explore them. To learn more about what you can do with your data in EO Browser, visit our EO Browser page.

EO Products

We recommend checking our “EO Products” page as well as the Index DataBase, which holds numerous index descriptions along with scientific articles.

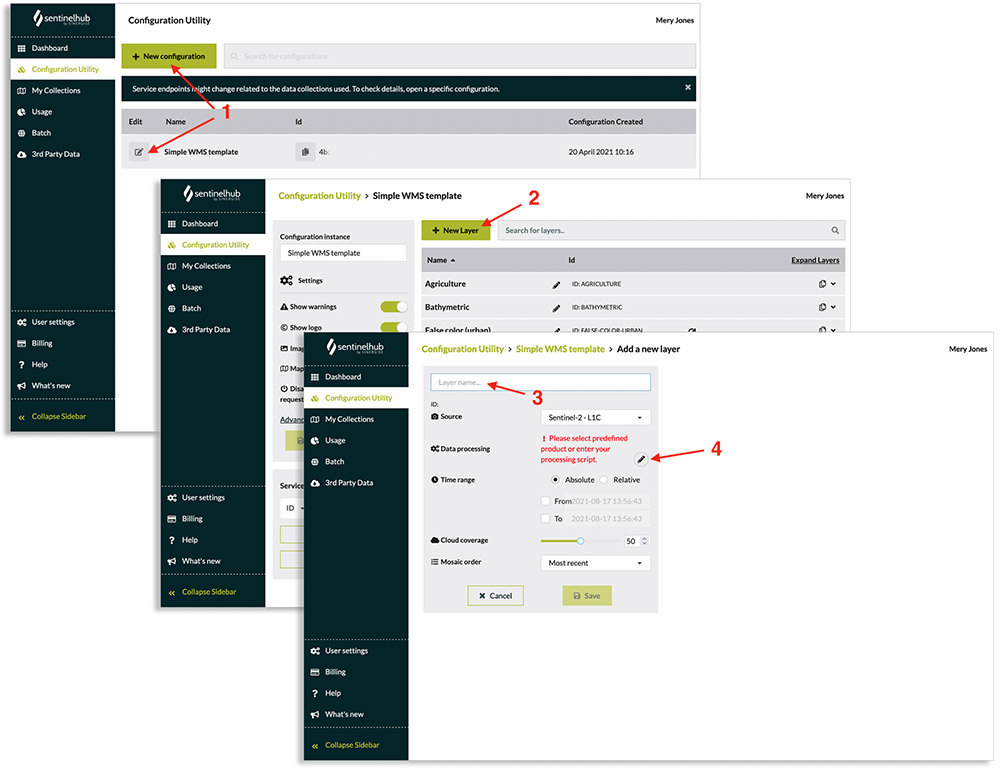

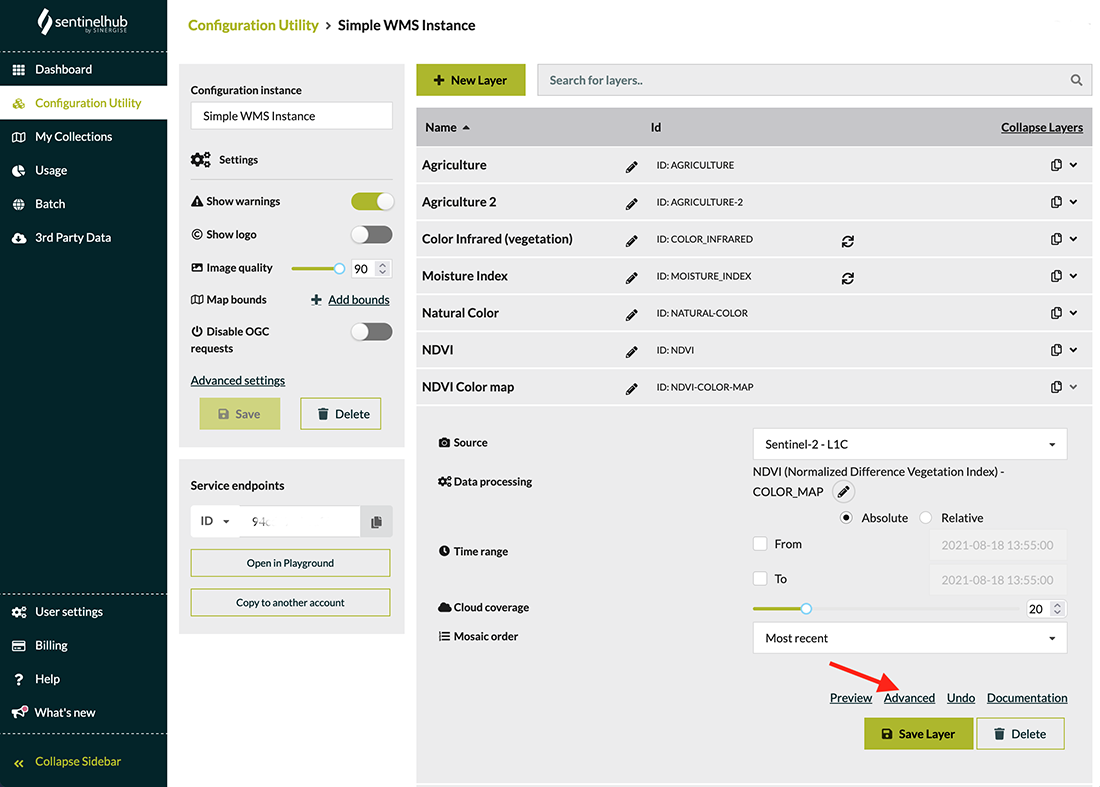

You can easily create a new EO product in the Configuration Utility.

- Create a new configuration or open an existing one.

- Click on “Add new layer”.

- Enter the title of the layer.

- Click the pencil icon next to the Data Processing option to select a predefined product or enter your processing script.

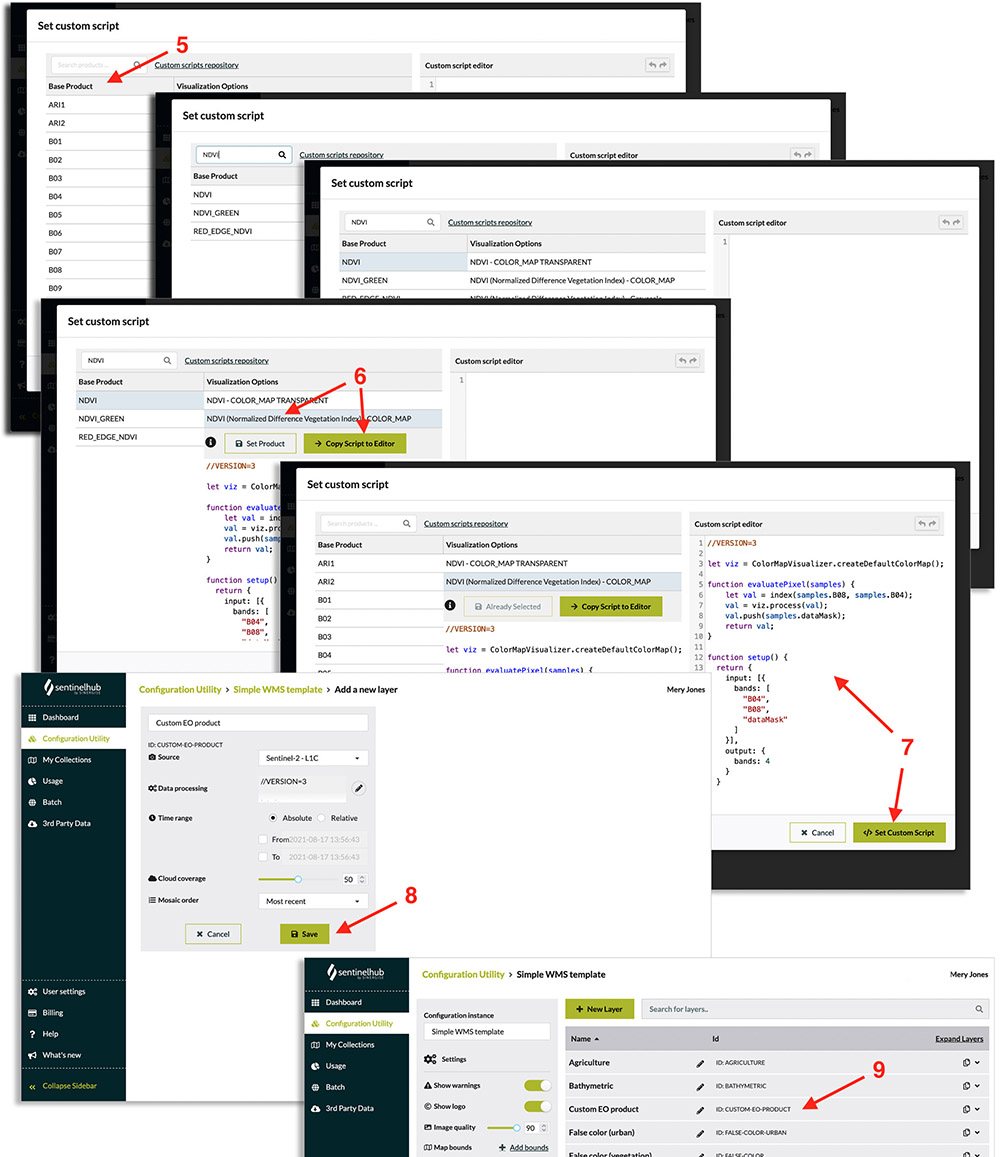

- Choose from the available Base Products. See also the available custom scripts for each data source in our repository.

- Click the selected product under Visualization Options, then click the “Copy Script to Editor” button.

- Edit the selected base script in the Custom script editor and click the “Set Custom Script” button when you are done.

- Click “Save” under the newly added layer to complete the creation of a new layer.

- The newly added layer will appear in the list of layers under your configuration.

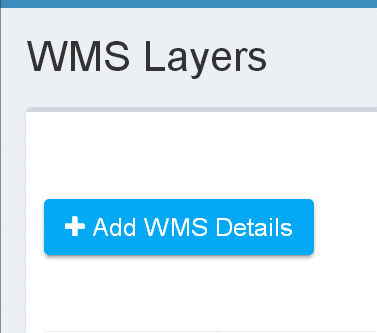

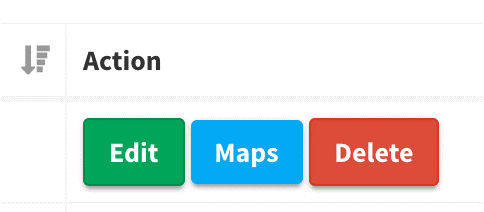

For detailed information about the WMS configurator, click here.

Read more about custom scripting.

To learn more about how to create a new configuration, a new layer with data products and a new layer with a custom script from the repository, watch our OGC API with QGIS integration webinar.

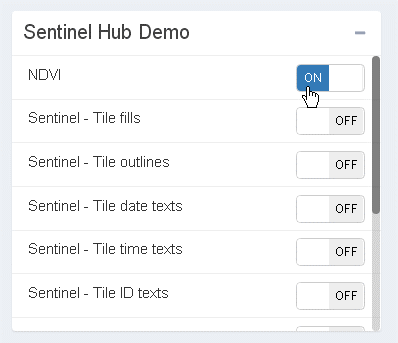

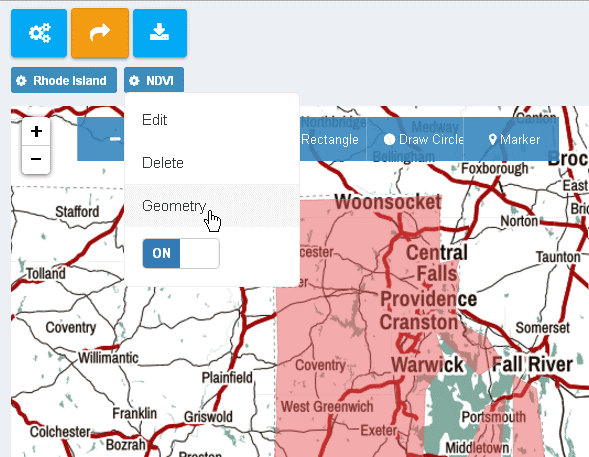

There are several ways you can view the Normalized Difference Vegetation Index (NDVI).

You can view it directly in EO Browser, which provides the NDVI visualization as one of the default visualizations.

You can create a new NDVI layer yourself with custom modifications in the Configuration Utility, and view it in EO Browser after logging in with your credentials. Read more about creating a new layer and visit our custom scripts repository for more variations of NDVI.
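For a custom NDVI layer like the one described above, a minimal V3 evalscript might look like the sketch below. The class bounds and colours are illustrative assumptions, not the palette EO Browser uses. Because the output has an alpha channel taken from dataMask, request it as PNG if you want no-data pixels to stay transparent.

```javascript
//VERSION=3
// Sketch of a custom NDVI visualization. The class bounds and colours are
// illustrative assumptions, not the palette used by EO Browser.
function setup() {
  return {
    input: [{ bands: ["B04", "B08", "dataMask"] }],
    output: { bands: 4 } // RGB + alpha
  };
}

// Each entry: [upper NDVI bound, [r, g, b]]; the first bound >= NDVI wins.
const NDVI_RAMP = [
  [0.0, [0.5, 0.5, 0.5]], // water, snow, clouds: grey
  [0.2, [0.9, 0.8, 0.5]], // bare soil, sparse vegetation: beige
  [0.5, [0.6, 0.8, 0.3]], // moderate vegetation: light green
  [1.0, [0.1, 0.5, 0.1]]  // dense vegetation: dark green
];

function evaluatePixel(sample) {
  const sum = sample.B08 + sample.B04;
  // Avoid division by zero, e.g. on no-data pixels.
  const ndvi = sum === 0 ? 0 : (sample.B08 - sample.B04) / sum;
  let colour = NDVI_RAMP[NDVI_RAMP.length - 1][1];
  for (let i = 0; i < NDVI_RAMP.length; i++) {
    if (ndvi <= NDVI_RAMP[i][0]) {
      colour = NDVI_RAMP[i][1];
      break;
    }
  }
  // Alpha from dataMask: pixels without data become transparent.
  return [colour[0], colour[1], colour[2], sample.dataMask];
}
```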

You can also integrate the NDVI layer with the WMS in your own application. Read more about the WMS request, OGC example requests and watch our OGC API with QGIS integration webinar.
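To call such a layer from your own application, you can assemble the WMS GetMap URL programmatically. The sketch below is an assumption-based helper, not an official client: "<INSTANCE_ID>" and the layer name "NDVI" are placeholders for your own configuration, and the parameter names mirror the example WMS call in the Image Manipulation section of this FAQ.

```javascript
// Sketch: build a WMS GetMap URL for a layer from your configuration.
// "<INSTANCE_ID>" and the layer name "NDVI" are placeholders; the parameter
// names mirror the example WMS call in the Image Manipulation section.
const WMS_BASE = "https://services.sentinel-hub.com/ogc/wms/";

function buildGetMapUrl(instanceId, opts) {
  const params = new URLSearchParams({
    service: "WMS",
    request: "GetMap",
    layers: opts.layer,
    format: opts.format || "image/png",
    time: opts.time,              // "start/end", e.g. "2017-06-01/2017-06-30"
    width: String(opts.width),
    height: String(opts.height),
    srs: opts.srs || "EPSG:3857",
    bbox: opts.bbox.join(",")     // minX,minY,maxX,maxY in srs units
  });
  if (opts.maxcc !== undefined) {
    params.set("maxcc", String(opts.maxcc)); // maximum cloud coverage in percent
  }
  return WMS_BASE + instanceId + "?" + params.toString();
}

// Usage with placeholder values:
const ndviUrl = buildGetMapUrl("<INSTANCE_ID>", {
  layer: "NDVI",
  time: "2017-06-01/2017-06-30",
  width: 512,
  height: 512,
  bbox: [1817366.784508351, 5882593.696827164, 1819812.7694134766, 5885039.681732289],
  maxcc: 20
});
```

URLSearchParams takes care of percent-encoding characters such as "/" in the time range and ":" in the SRS, which the hand-written example call encodes manually.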

When you select an “EO Product template”, you can see the custom script behind each visualization or processing step, so that it is as transparent as possible what happens to the data. We change these configurations over time, adding new ones and improving existing ones. If you want to ensure that the processing of your layers no longer changes, simply edit the original custom script; you then have full control over it.

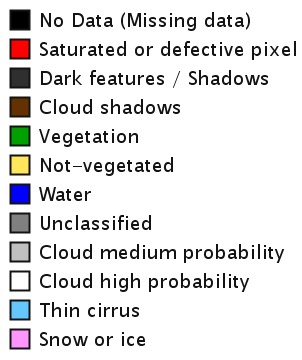

You can get information from the scene classification layer produced by Sen2Cor for data where Sentinel-2 L2A is available. The data can be retrieved with the identifier “SCL” (e.g. instead of return [B02]; for the blue band, you can use return [SCL/10];).

The data can then be used, for example, to validate pixel values along these lines:

    var scl = SCL;
    if (scl == 0) { // No Data
      return [0, 0, 0]; // black
    } else if (scl == 1) { // Saturated / Defective
      return [1, 0, 0.016]; // red
    } else if (scl == 2) { // Dark Area Pixels
      return [0.525, 0.525, 0.525]; // gray
    } else if (scl == 3) { // Cloud Shadows
      return [0.467, 0.298, 0.043]; // brown
    } else if (scl == 4) { // Vegetation
      return [0.063, 0.827, 0.176]; // green
    } else if (scl == 5) { // Bare Soils
      return [1, 1, 0.325]; // yellow
    } else if (scl == 6) { // Water
      return [0, 0, 1]; // blue
    } else if (scl == 7) { // Clouds low probability / Unclassified
      return [0.506, 0.506, 0.506]; // medium gray
    } else if (scl == 8) { // Clouds medium probability
      return [0.753, 0.753, 0.753]; // light gray
    } else if (scl == 9) { // Clouds high probability
      return [0.949, 0.949, 0.949]; // very light gray
    } else if (scl == 10) { // Cirrus
      return [0.733, 0.773, 0.925]; // light blue/purple
    } else if (scl == 11) { // Snow / Ice
      return [0.325, 1, 0.980]; // cyan
    } else { // should never happen
      return [0, 0, 0];
    }

Please note that this layer only makes sense at full resolution, as interpolating classification codes does not produce meaningful results. You should also use the NEAREST upsampling/downsampling setting, which you can find in the “Advanced layer editor”.

Also note that this map is not a land cover classification map in the strict sense; its main purpose is internal use in the Sen2Cor atmospheric correction module, to distinguish between cloudy, clear and water pixels.
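The same SCL colouring can also be expressed in the V3 evalscript syntax used elsewhere in this FAQ. The sketch below is a restatement with a lookup table instead of the if/else chain; it assumes a Sentinel-2 L2A data source (where the "SCL" band exists) and reuses the colours from the script above. The NEAREST resampling advice still applies.

```javascript
//VERSION=3
// Sketch: the SCL colouring rewritten in V3 syntax with a lookup table.
// Assumes a Sentinel-2 L2A data source, where the "SCL" band is available.
function setup() {
  return {
    input: [{ bands: ["SCL"] }],
    output: { bands: 3 }
  };
}

// Index = SCL class value (0..11); colours copied from the V1 script.
const SCL_COLOURS = [
  [0, 0, 0],             // 0  No Data: black
  [1, 0, 0.016],         // 1  Saturated / Defective: red
  [0.525, 0.525, 0.525], // 2  Dark Area Pixels: gray
  [0.467, 0.298, 0.043], // 3  Cloud Shadows: brown
  [0.063, 0.827, 0.176], // 4  Vegetation: green
  [1, 1, 0.325],         // 5  Bare Soils: yellow
  [0, 0, 1],             // 6  Water: blue
  [0.506, 0.506, 0.506], // 7  Clouds low probability / Unclassified
  [0.753, 0.753, 0.753], // 8  Clouds medium probability
  [0.949, 0.949, 0.949], // 9  Clouds high probability
  [0.733, 0.773, 0.925], // 10 Cirrus: light blue/purple
  [0.325, 1, 0.980]      // 11 Snow / Ice: cyan
];

function evaluatePixel(sample) {
  const colour = SCL_COLOURS[sample.SCL];
  // Unknown class values fall back to black, as in the V1 script.
  return colour !== undefined ? colour : [0, 0, 0];
}
```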

An example of Blinnenhorn, Switzerland (October 14, 2017):

Image Manipulation

If you want to get float values out of the service, you have to use the 32-bit float image type (uint8 and uint16 types only support integers). A custom script that returns NDVI values for Sentinel-2 data could be:

    //VERSION=3
    function setup() {
      return {
        input: [{
          bands: ["B04", "B08"]
        }],
        output: {
          id: "default",
          bands: 1,
          sampleType: SampleType.FLOAT32
        }
      };
    }

    function evaluatePixel(sample) {
      let ndvi = (sample.B08 - sample.B04) / (sample.B08 + sample.B04);
      return [ndvi];
    }

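You can either save this script in the Configuration Utility or pass it, Base64-encoded, as the EVALSCRIPT parameter. An example call for the latter is shown below; note that its EVALSCRIPT value encodes a shorter legacy (V1) script, var ndvi = (B08-B04)/(B08+B04); return [ndvi];, rather than the script above: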

    https://services.sentinel-hub.com/ogc/wms/<INSTANCE_ID>?service=WMS&request=GetMap&layers=MY_LAYER&format=image/tiff&maxcc=20&time=2015-01-01%2F2017-12-05&height=512&width=512&srs=EPSG%3A3857&bbox=1817366.784508351,5882593.696827164,1819812.7694134766,5885039.681732289&EVALSCRIPT=dmFyIG5kdmkgPSAoQjA4LUIwNCkvKEIwOCtCMDQpOw0KcmV0dXJuIFtuZHZpXTs=
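Producing the EVALSCRIPT value by hand is error-prone, so here is a small sketch for doing it in code. The helper names encodeEvalscript and withEvalscript are hypothetical; the sketch assumes an ASCII-only script and a runtime with btoa (browsers, recent Node.js), falling back to Node's Buffer otherwise.

```javascript
// Sketch: Base64-encode an evalscript and attach it as the EVALSCRIPT
// parameter of an existing WMS URL. Hypothetical helper names.
// Only ASCII scripts are handled, which covers typical evalscripts.
function encodeEvalscript(script) {
  if (typeof btoa === "function") {
    return btoa(script); // browsers and recent Node.js
  }
  return Buffer.from(script, "binary").toString("base64"); // older Node.js
}

function withEvalscript(wmsUrl, script) {
  const url = new URL(wmsUrl);
  // searchParams percent-encodes "+", "/" and "=" from the Base64 alphabet,
  // so they are not misread as query-string syntax.
  url.searchParams.set("EVALSCRIPT", encodeEvalscript(script));
  return url.toString();
}

// Usage: encode the legacy two-line NDVI script.
const legacyNdvi = "var ndvi = (B08-B04)/(B08+B04);\r\nreturn [ndvi];";
const encoded = encodeEvalscript(legacyNdvi);
```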

We also suggest checking the FAQ about the details of internal calculations.

If you want pixels to be transparent (or semi-transparent), do the following:

- Use format=image/png (note that PNGs are larger than JPGs, which might affect download speed).

- The custom script output needs to have 4 channels, the fourth being alpha, e.g. "return [1,0,0,0.5]" for a semi-transparent red pixel or "return [0,0,0,0]" for full transparency.

For example, to show Hollstein's cloud overview layer so that everything except clouds is transparent, change

    let naturalColour = [B04, B03, B02].map(a => gain * a);
    let CLEAR = naturalColour;
    let SHADOW = naturalColour;
    let WATER = [0.1,0.1,0.7];
    let CIRRUS = [0.8,0.1,0.1];
    let CLOUD = [0.3,0.3,1.0];
    let SNOW = [1.0,0.8,0.4];

to

    let naturalColour = [0,0,0,0];
    let CLEAR = naturalColour;
    let SHADOW = naturalColour;
    let WATER = [0.1,0.1,0.7,1];
    let CIRRUS = [0.8,0.1,0.1,1];
    let CLOUD = [0.3,0.3,1.0,1];
    let SNOW = [1.0,0.8,0.4,1];

Note that all other outputs of the script need to have 4 channels as well.
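The same transparency rules apply to any V3 script. As an illustration, the sketch below highlights likely water as semi-transparent blue and leaves everything else fully transparent. It uses McFeeters' NDWI, (B03 - B08) / (B03 + B08), with a threshold of 0; the index choice and threshold are assumptions for the example, not a recommended water mask. Remember to request format=image/png.

```javascript
//VERSION=3
// Sketch: semi-transparent blue where NDWI suggests water, transparent
// elsewhere. NDWI = (B03 - B08) / (B03 + B08) (McFeeters); the 0.0
// threshold is an illustrative assumption.
const WATER_THRESHOLD = 0.0;

function setup() {
  return {
    input: [{ bands: ["B03", "B08", "dataMask"] }],
    output: { bands: 4 } // RGB + alpha, so request format=image/png
  };
}

function evaluatePixel(sample) {
  const sum = sample.B03 + sample.B08;
  const ndwi = sum === 0 ? 0 : (sample.B03 - sample.B08) / sum;
  if (sample.dataMask === 1 && ndwi > WATER_THRESHOLD) {
    return [0, 0, 1, 0.5]; // semi-transparent blue
  }
  return [0, 0, 0, 0]; // fully transparent
}
```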

Instead of the WIDTH and HEIGHT parameters, you can use RESX and RESY.

For example, if you add “RESX=10m&RESY=10m”, the image will be returned at 10 m resolution.
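To see how RESX/RESY relate to WIDTH/HEIGHT, the sketch below computes the pixel dimensions implied by a resolution for a bounding box in a projected CRS whose units are metres (e.g. a UTM zone). The rounding to whole pixels is an assumption made for the example and is not a description of how the service computes the output size. In EPSG:3857, one map unit equals one ground metre only at the equator, so this simple division does not give ground resolution there.

```javascript
// Sketch: image size implied by a pixel resolution for a bbox given in a
// metric projected CRS. Rounding to whole pixels is an assumption.
function impliedImageSize(bbox, resX, resY) {
  const [minX, minY, maxX, maxY] = bbox;
  return {
    width: Math.round((maxX - minX) / resX),
    height: Math.round((maxY - minY) / resY)
  };
}

// A 10 km x 5 km box at 10 m resolution is about 1000 x 500 pixels.
const size = impliedImageSize([500000, 4000000, 510000, 4005000], 10, 10);
```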

Reflectance is a physical property of surfaces, equal to the ratio of reflected light to incident light (source), with typical values ranging from 0 to 1. Retrieving it requires the 32-bit float TIFF format. To get the original reflectance data from the satellite, use an advanced evalscript and set sampleType to FLOAT32. See the evalscript example for a single grayscale band below:

    //VERSION=3
    function setup() {
      return {
        input: [{
          bands: ["B04"]
        }],
        output: {
          bands: 1,
          sampleType: "FLOAT32"
        }
      };
    }

    function evaluatePixel(samples) {
      return [samples.B04];
    }

To get the exact original reflectance values, you must also make sure that the pixels output by Sentinel Hub have exactly the same position and size as in the original data. To do so, make sure to:

- Request a bounding box that is aligned with the grid in which the satellite data is distributed.

- Request the same resolution as in the original data (note that different bands of the same satellite can have different resolutions; to check the resolution of each band, see our data documentation).
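The grid-alignment requirement above can be met by expanding a bounding box outward to the nearest pixel edges. The sketch below does this for a grid defined by an origin and a pixel size in the same CRS units. The helper name is hypothetical, and the default origin (0, 0) is an assumption: verify the actual grid origin for your data (for Sentinel-2, against the tile metadata in the matching UTM zone) before relying on it.

```javascript
// Sketch: expand a bbox outward so that its edges fall on a pixel grid.
// Assumes the grid origin (originX, originY) and the pixel size res are in
// the same CRS units as the bbox; (0, 0) is an assumed default origin.
function snapBboxToGrid(bbox, res, originX = 0, originY = 0) {
  const [minX, minY, maxX, maxY] = bbox;
  return [
    originX + Math.floor((minX - originX) / res) * res,
    originY + Math.floor((minY - originY) / res) * res,
    originX + Math.ceil((maxX - originX) / res) * res,
    originY + Math.ceil((maxY - originY) / res) * res
  ];
}

// Example: snap a UTM box to a 10 m grid.
const snapped = snapBboxToGrid([500003, 4000007, 509998, 4004991], 10);
```

Expanding outward rather than rounding to the nearest edge guarantees the requested area is fully covered; pair it with the band's native resolution.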

We also suggest checking the FAQ about how values are calculated and returned in Sentinel Hub.

There are several options, depending on what you would like to do.

OGC services

- You can use the WFS service with the same parameters as used in your WMS request (e.g. date, cloud coverage). You will get a list of features representing scenes fitting the criteria.

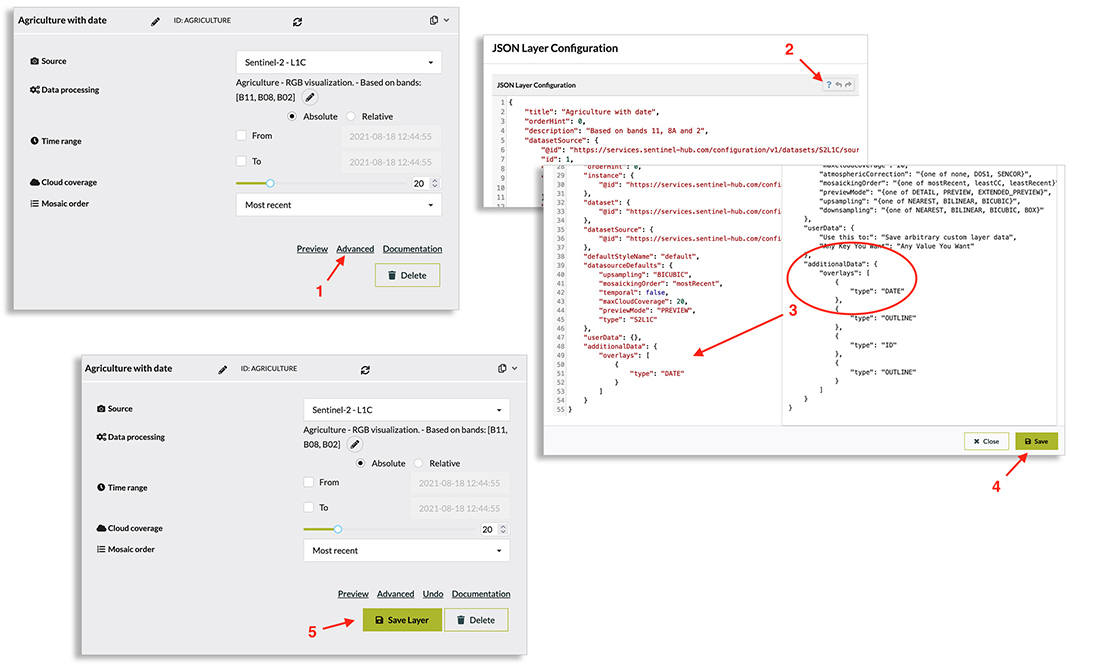

- You can configure the layer to show acquisition dates on images returned from the WMS service. Go to the layer of your choice and follow these steps:

- Click the “Advanced” option in the Layer tab to open the advanced parameters dialogue.

- (optional) You can turn on Help by clicking “?” at the top right.

- Add an “additionalData” node with type “Date”.

- Click Save in the advanced parameters dialogue.

- Click Save in the layer’s tab.

Catalog API

- Make a request to the Catalog API where you set the “distinct” parameter to “date”, along with the bounding box, time span and collection for which you want to get the acquisition dates (see example).
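A Catalog API search of this kind could be built as in the sketch below. Only the request body is shown: the collection id "sentinel-2-l2a", the coordinates, the time range and the page size of 100 are example assumptions, and the search endpoint URL and authentication (a bearer token) should be taken from the Catalog API documentation.

```javascript
// Sketch: request body for a Catalog API search returning distinct
// acquisition dates. Collection id, bbox, time range and limit are example
// assumptions; endpoint and authentication are not shown.
function buildDistinctDatesQuery(bbox, from, to, collection) {
  return {
    bbox: bbox,                // [minLon, minLat, maxLon, maxLat] in WGS84
    datetime: `${from}/${to}`, // ISO 8601 interval
    collections: [collection],
    distinct: "date",          // return dates instead of full features
    limit: 100                 // assumed page size; more results are paginated
  };
}

const query = buildDistinctDatesQuery(
  [13.35, 45.77, 13.6, 45.93],
  "2020-06-01T00:00:00Z",
  "2020-06-30T23:59:59Z",
  "sentinel-2-l2a"
);
// Send JSON.stringify(query) as the body of a POST request to the search endpoint.
```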

The grids of solar zenith angles and solar azimuth angles are accessible under the names sunZenithAngles and sunAzimuthAngles, respectively, and can be used in a similar way to band values, e.g.: